In “Truth or Dare”, Duncan Sabien articulates a phenomenon in which expectations of good or bad behavior can become self-fulfilling: people who expect to be exploited and feel the need to put up defenses both elicit and get sorted into a Dark World where exploitation is likely and defenses are necessary, whereas people who expect beneficence tend to attract beneficence in turn.

Among many other examples, Sabien highlights the phenomenon of gift economies: a high-trust culture in which everyone is eager to help each other out whenever they can is a nicer place to live than a low-trust culture in which every transaction must be carefully tracked for fear of enabling free-riders.

I’m skeptical of the extent to which differences between high- and low-trust cultures can be explained by self-fulfilling prophecies as opposed to pre-existing differences in trustworthiness, but I do grant that self-fulfilling expectations can sometimes play a role: if I insist on always being paid back immediately and in full, it makes sense that that would impede the development of gift-economy culture among my immediate contacts. So far, the theory articulated in the essay seems broadly plausible.

Later, however, the post takes an unexpected turn:

> Treating all of the essay thus far as prerequisite and context:
>
> This is why you should not trust Zack Davis, when he tries to tell you what constitutes good conduct and productive discourse. Zack Davis does not understand how high-trust, high-cooperation dynamics work. He has never seen them. They are utterly outside of his experience and beyond his comprehension. What he knows how to do is keep his footing in a world of liars and thieves and pickpockets, and he does this with genuinely admirable skill and inexhaustible tenacity.
>
> But (as far as I can tell, from many interactions across years) Zack Davis does not understand how advocating for and deploying those survival tactics (which are 100% appropriate for use in an adversarial memetic environment) utterly destroys the possibility of building something Better. Even if he wanted to hit the “cooperate” button—
>
> (In contrast to his usual stance, which from my perspective is something like “look, if we all hit ‘defect’ together, in full foreknowledge, then we don’t have to extend trust in any direction and there’s no possibility of any unpleasant surprises and you can all stop grumping at me for repeatedly ‘defecting’ because we’ll all be cooperating on the meta level, it’s not like I didn’t warn you which button I was planning on pressing, I am in fact very consistent and conscientious.”)
>
> —I don’t think he knows where it is, or how to press it.
>
> (Here I’m talking about the literal actual Zack Davis, but I’m also using him as a stand-in for all the dark world denizens whose well-meaning advice fails to take into account the possibility of light.)

As a reader of the essay, I reply: wait, who? Am I supposed to know who this Davis person is? Ctrl-F search confirms that they weren’t mentioned earlier in the piece; there’s no reason for me to have any context for whatever this section is about.

As Zack Davis, however, I have a more specific reply, which is: yeah, I don’t think that button does what you think it does. Let me explain.

In figuring out what would constitute good conduct and productive discourse, it’s important to appreciate how bizarre the human practice of “discourse” looks in light of Aumann’s dangerous idea.

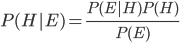

There’s only one reality. If I’m a Bayesian reasoner honestly reporting my beliefs about some question, and you’re also a Bayesian reasoner honestly reporting your beliefs about the same question, we should converge on the same answer, not because we’re cooperating with each other, but because it is the answer. When I update my beliefs based on your report on your beliefs, it’s strictly because I expect your report to be evidentially entangled with the answer. Maybe that’s a kind of “trust”, but if so, it’s in the same sense in which I “trust” that an increase in atmospheric pressure will exert force on the exposed basin of a classical barometer and push more mercury up the reading tube. It’s not personal and it’s not reciprocal: the barometer and I aren’t doing each other any favors. What would that even mean?

In contrast, my friends and I in a gift economy are doing each other favors. That kind of setting featuring agents with a mixture of shared and conflicting interests is the context in which the concepts of “cooperation” and “defection” and reciprocal “trust” (in the sense of people trusting each other, rather than a Bayesian robot trusting a barometer) make sense. If everyone pitches in with chores when they can, we all get the benefits of the chores being done—that’s cooperation. If you never wash the dishes, you’re getting the benefits of a clean kitchen without paying the costs—that’s defection. If I retaliate by refusing to wash any dishes myself, then we both suffer a dirty kitchen, but at least I’m not being exploited—that’s mutual defection. If we institute a chore wheel with an auditing regime, that reëstablishes cooperation, but we’re paying higher transaction costs for our lack of trust. And so on: Sabien’s essay does a good job of explaining how there can be more than one possible equilibrium in this kind of system, some of which are much more pleasant than others.
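The dish-washing scenario can be sketched as a one-shot two-player game. The payoff numbers below are hypothetical (the essay fixes none), chosen only so that each wash is worth less to the washer than it costs, but more to the household as a whole, which is what gives the situation its mixture of shared and conflicting interests.

```python
from itertools import product

# Hypothetical numbers: each person who washes makes the kitchen cleaner,
# worth BENEFIT to *each* housemate, at a personal COST to the washer.
BENEFIT = 2
COST = 3
ACTIONS = ("wash", "skip")

def payoff(me: str, other: str) -> int:
    """My payoff given my action and my housemate's action."""
    washers = (me == "wash") + (other == "wash")
    return BENEFIT * washers - (COST if me == "wash" else 0)

def best_response(other: str) -> str:
    """The action that maximizes my payoff, holding the other's action fixed."""
    return max(ACTIONS, key=lambda action: payoff(action, other))

for me, other in product(ACTIONS, repeat=2):
    print(f"{me:>4} vs {other:>4}: me {payoff(me, other):+d}, other {payoff(other, me):+d}")

# Skipping is the best response to either action (defection dominates) ...
assert best_response("wash") == best_response("skip") == "skip"
# ... even though mutual washing beats mutual skipping for both of us.
assert payoff("wash", "wash") > payoff("skip", "skip")
```

In this framing, the chore wheel with an auditing regime amounts to changing the payoffs (for example, penalizing skipped turns) while paying the cost of running the audit.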

If you’ve seen high-trust gift-economy-like cultures working well and low-trust backstabby cultures working poorly, it might be tempting to generalize from the domains of interpersonal or economic relationships, to rational (or even “rationalist”) discourse. If trust and cooperation are essential for living and working together, shouldn’t the same lessons apply straightforwardly to finding out what’s true together?

Actually, no. The issue is that the payoff matrices are different.

Life and work involve a mixture of shared and conflicting interests. The existence of some conflicting interests is an essential part of what it means for you and me to be two different agents rather than interchangeable parts of the same hivemind: we should hope to do well together, but when push comes to shove, I care more about me doing well than you doing well. The art of cooperation is about maintaining the conditions such that push does not in fact come to shove.

But correct epistemology does not involve conflicting interests. There’s only one reality. Bayesian reasoners cannot agree to disagree. Accordingly, when humans successfully approach the Bayesian ideal, it doesn’t particularly feel like cooperating with your beloved friends, who see you with all your blemishes and imperfections but would never let a mere disagreement interfere with loving you. It usually feels like just perceiving things—resolving disagreements so quickly that you don’t even notice them as disagreements.
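One way to illustrate the absence of conflicting interests: suppose (an assumption for illustration; the essay doesn’t mention scoring rules) that we both forecast the same event and are each scored against the same outcome with the same proper scoring rule, here the logarithmic score. Since the score depends only on the report and on reality, the report that is best for me is also the report that is best for you, namely the true probability.

```python
import math

def expected_log_score(report: float, true_prob: float) -> float:
    """Expected logarithmic score for forecasting `report` on an event
    that actually happens with probability `true_prob`."""
    return true_prob * math.log(report) + (1 - true_prob) * math.log(1 - report)

def best_report(true_prob: float, steps: int = 999) -> float:
    """Grid search over reports 0.001, 0.002, ..., 0.999."""
    grid = [i / (steps + 1) for i in range(1, steps + 1)]
    return max(grid, key=lambda q: expected_log_score(q, true_prob))

# There is one reality (say the event has probability 0.3). Both of us are
# scored against it with the same rule, so our payoff functions are identical:
# there is no "my preferred answer" versus "your preferred answer" to trade off.
print(best_report(0.3))
```

The maximizing report is the same whoever is making it, which is the sense in which there is nothing to compromise over.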

Suppose you and I have just arrived at a bus stop. The bus arrives every half-hour. I don’t know when the last bus was, so I don’t know when the next bus will be: I assign a uniform probability distribution over the next thirty minutes. You recently looked at the transit authority’s published schedule, which says the bus will come in six minutes: most of your probability-mass is concentrated tightly around six minutes from now.

We might not consciously notice this as a “disagreement”, but it is: you and I have different beliefs about when the next bus will arrive; our probability distributions aren’t the same. It’s also very ephemeral: when I ask, “When do you think the bus will come?” and you say, “six minutes; I just checked the schedule”, I immediately replace my belief with yours, because I think the published schedule is probably right and there’s no particular reason for you to lie about what it says.
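A minimal sketch of the bus-stop update, discretizing the next half-hour into one-minute bins. The likelihood model (the schedule is usually right to within a minute, occasionally just wrong) and its numbers are hypothetical; the essay only says your probability mass is concentrated tightly around six minutes.

```python
MINUTES = range(30)  # one-minute bins covering the next half-hour

def normalize(weights: dict[int, float]) -> dict[int, float]:
    total = sum(weights.values())
    return {t: w / total for t, w in weights.items()}

# My prior: I don't know when the last bus was, so uniform over 30 minutes.
my_prior = {t: 1 / 30 for t in MINUTES}

def likelihood_of_report(reported: int, t: int) -> float:
    """Hypothetical model of how likely you are to say `reported` if the bus
    really arrives at minute t: the schedule is usually right to within a
    minute, and occasionally it is simply wrong."""
    return 1.0 if abs(t - reported) <= 1 else 0.01

# You say "six minutes; I just checked the schedule."
posterior = normalize({t: my_prior[t] * likelihood_of_report(6, t) for t in MINUTES})

mass_near_six = sum(posterior[t] for t in (5, 6, 7))
print(f"P(bus within a minute of 6): before {3 / 30:.2f}, after {mass_near_six:.2f}")
```

Because my prior was flat, the posterior simply takes the shape of the likelihood: in effect, I replace my belief with yours.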

Alternatively, suppose that we both checked different versions of the schedule, which disagree: the schedule I looked at said the next bus is in twenty minutes, not six. When we discover the discrepancy, we infer that one of the schedules must have been outdated, and both adopt a distribution with most of the probability-mass in separate clumps around six and twenty minutes from now. Our initial beliefs can’t both have been right—but there’s no reason for me to weight my prior belief more heavily just because it was mine.
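The two-schedule case can be sketched the same way. Assuming (hypothetically) that each report spreads its mass evenly within a minute of the stated time, and that neither of us has any reason to think our own schedule is the current one, the combined belief is an equal-weight mixture with two clumps.

```python
MINUTES = range(30)  # one-minute bins covering the next half-hour

def report_distribution(reported: int) -> dict[int, float]:
    """Hypothetical shape: mass spread evenly within a minute of the reported time."""
    near = [t for t in MINUTES if abs(t - reported) <= 1]
    return {t: (1 / len(near) if t in near else 0.0) for t in MINUTES}

def mixture(dists: list[dict[int, float]], weights: list[float]) -> dict[int, float]:
    return {t: sum(w * d[t] for d, w in zip(dists, weights)) for t in MINUTES}

yours = report_distribution(6)
mine = report_distribution(20)
# Equal weights: my schedule gets no extra credit just for being mine.
combined = mixture([yours, mine], [0.5, 0.5])

clump_six = sum(combined[t] for t in (5, 6, 7))
clump_twenty = sum(combined[t] for t in (19, 20, 21))
print(f"{clump_six:.2f} near six, {clump_twenty:.2f} near twenty")
```

If one of us had evidence about which schedule was outdated, that evidence (not ownership of the belief) would justify unequal weights.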

At worst, approximating ideal belief exchange feels like working on math. Suppose you and I are studying the theory of functions of a complex variable. We’re trying to prove or disprove the proposition that if an entire function satisfies f(x + 1) = f(x) for real x, then f(z + 1) = f(z) for all complex z. I suspect the proposition is false and set about trying to construct a counterexample; you suspect the proposition is true and set about trying to write a proof by contradiction. Our different approaches do seem to imply different probabilistic beliefs about the proposition, but I can’t be confident in my strategy just because it’s mine, and we expect the disagreement to be transient: as soon as I find my counterexample or you find your reductio, we should be able to share our work and converge.
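As it happens, this particular proposition is true. A sketch of the standard argument via the identity theorem, added here for completeness (the point of the example doesn’t depend on which side wins):

```latex
Let $g(z) = f(z + 1) - f(z)$. Because $f$ is entire, $g$ is entire, and by
hypothesis $g(x) = 0$ for every real $x$. The zero set of $g$ therefore contains
$\mathbb{R}$, which has accumulation points in $\mathbb{C}$, so by the identity
theorem $g \equiv 0$; that is, $f(z + 1) = f(z)$ for all $z \in \mathbb{C}$.
```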

Most real-world disagreements of interest don’t look like the bus arrival or math problem examples—qualitatively, not as a matter of trying to prove quantitatively harder theorems. Real-world disagreements tend to persist; they’re predictable—in flagrant contradiction of how the beliefs of Bayesian reasoners would follow a random walk. From this we can infer that typical human disagreements aren’t “honest”, in the sense that at least one of the participants is behaving as if they have some other goal than getting to the truth.
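The “random walk” point is the conservation of expected evidence: averaged over the evidence a Bayesian reasoner might see next, their expected posterior equals their current belief, so they cannot predict in which direction their own beliefs will move. A minimal check with exact arithmetic, using a hypothetical coin example that is not from the essay:

```python
from fractions import Fraction

# Hypothetical example: a coin is either fair or biased toward heads,
# and I start out 50/50 between the two hypotheses.
prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
p_heads = {"fair": Fraction(1, 2), "biased": Fraction(9, 10)}

def likelihood(hypothesis: str, outcome: str) -> Fraction:
    p = p_heads[hypothesis]
    return p if outcome == "heads" else 1 - p

def prob_outcome(belief: dict, outcome: str) -> Fraction:
    """Predictive probability of the next outcome under my current belief."""
    return sum(belief[h] * likelihood(h, outcome) for h in belief)

def posterior(belief: dict, outcome: str) -> dict:
    """Bayes' rule: reweight hypotheses by how well they predicted the outcome."""
    total = prob_outcome(belief, outcome)
    return {h: belief[h] * likelihood(h, outcome) / total for h in belief}

# Before flipping: average my future belief over the outcomes I might see.
expected_next = sum(
    prob_outcome(prior, o) * posterior(prior, o)["biased"] for o in ("heads", "tails")
)
print(posterior(prior, "heads")["biased"], posterior(prior, "tails")["biased"], expected_next)
# prints: 9/14 1/6 1/2
```

Either outcome moves the belief, but the moves cancel in expectation. A disagreement that both parties can predict will persist in a known direction is therefore a sign that something other than evidence is driving at least one of them.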

Importantly, this characterization of dishonesty is using a functionalist criterion: when I say that people are behaving as if they have some other goal than getting to the truth, that need not imply that anyone is consciously lying; “mere” bias is sufficient to carry the argument.

Dishonest disagreements end up looking like conflicts because they are disguised conflicts. The parties to a dishonest disagreement are competing to get their preferred belief accepted, where beliefs are being preferred for some reason other than their accuracy: for example, because acceptance of the belief would imply actions that would benefit the belief-holder. If it were true that my company is the best, it would follow logically that customers should buy my products and investors should fund me. And yet a discussion with me about whether or not my company is the best probably doesn’t feel like a discussion about bus arrival times or the theory of functions of a complex variable. You probably expect me to behave as if I thought my belief is better “because it’s mine”, to treat attacks on the belief as if they were attacks on my person: a conflict rather than a disagreement.

“My company is the best” is a particularly stark example of a typically dishonest belief, but the pattern is very general: when people are attached to their beliefs for whatever reason—which is true for most of the beliefs that people spend time disagreeing about, as contrasted to math and bus-schedule disagreements that resolve quickly—neither party is being rational (which doesn’t mean neither party is right on the object level). Attempts to improve the situation should take into account that the typical case is not that of truthseekers who can do better at their shared goal if they learn to trust each other, but rather of people who don’t trust each other because each correctly perceives that the other is not truthseeking.

Again, “not truthseeking” here is meant in a functionalist sense. It doesn’t matter if both parties subjectively think of themselves as honest. The “distrust” that prevents Aumann-agreement-like convergence is about how agents respond to evidence, not about subjective feelings. It applies as much to a mislabeled barometer as it does to a human with a functionally-dishonest belief. If I don’t think the barometer readings correspond to the true atmospheric pressure, I might still update on evidence from the barometer in some way if I have a guess about how its labels correspond to reality, but I’m still going to disagree with its reading according to the false labels.
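A minimal sketch of learning from a possibly-mislabeled barometer, assuming (hypothetically) Gaussian uncertainty and a guess that the scale reads 15 mm too high. I still update on the reading, but only after decoding it through my model of the labels, so I end up disagreeing with the number printed on the dial.

```python
def normal_update(prior_mean: float, prior_var: float, obs: float, obs_var: float):
    """Conjugate Gaussian update of a belief about a single quantity."""
    gain = prior_var / (prior_var + obs_var)
    return prior_mean + gain * (obs - prior_mean), (1 - gain) * prior_var

# Hypothetical numbers, in millimeters of mercury.
PRIOR_MEAN, PRIOR_VAR = 760.0, 8.0 ** 2  # my belief before looking at the dial
READING_VAR = 1.0 ** 2                   # noise in the instrument itself
ASSUMED_OFFSET = 15.0                    # my guess: the labels read 15 mm too high

reading = 777.0                          # what the dial says
decoded = reading - ASSUMED_OFFSET       # undo the suspected mislabeling first
belief_mean, belief_var = normal_update(PRIOR_MEAN, PRIOR_VAR, decoded, READING_VAR)
print(f"dial: {reading:.0f} mmHg; my belief: {belief_mean:.1f} mmHg")
```

Nothing in this update depends on whether the barometer “means well”; only the model of how its readings relate to the world matters.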

There are techniques for resolving economic or interpersonal conflicts that involve both parties adopting a more cooperative approach, each being more willing to do what the other party wants (while the other reciprocates by doing more of what the first one wants). Someone who had experience resolving interpersonal conflicts using techniques to improve cooperation might be tempted to apply the same toolkit to resolving dishonest disagreements.

It might very well work for resolving the disagreement. It probably doesn’t work for resolving the disagreement correctly, because cooperation is about finding a compromise amongst agents with partially conflicting interests, and in a dishonest disagreement in which both parties have non-epistemic goals, trying to do more of what the other party functionally “wants” amounts to catering to their bias, not systematically getting closer to the truth.

Cooperative approaches are particularly dangerous insofar as they seem likely to produce a convincing illusion of rationality, despite the participants’ best conscious intentions. It’s common for discussions to involve more than one point of disagreement. An apparently productive discussion might end with me saying, “Okay, I see you have a point about X, but I was still right about Y.”

This is a success if the reason I’m saying that is downstream of you in fact having a point about X but me in fact having been right about Y. But another state of affairs that would result in me saying that sentence is that we were functionally playing a social game in which I implicitly agreed to concede on X (which you visibly care about) in exchange for you ceding ground on Y (which I visibly care about).

Let’s sketch out a toy model to make this more concrete. “Truth or Dare” uses color perception as an illustration of confirmation bias: if you’ve been primed to make the color yellow salient, it’s easy to perceive an image as being yellower than it is.

Suppose Jade and Ruby consciously identify as truthseekers, but really, Jade is biased to perceive non-green things as green 20% of the time, and Ruby is biased to perceive non-red things as red 20% of the time. In our functionalist sense, we can model Jade as “wanting” to misrepresent the world as being greener than it is, and Ruby as “wanting” to misrepresent the world as being redder than it is.

Confronted with a sequence of gray objects, Jade and Ruby get into a heated argument: Jade thinks 20% of the objects are green and 0% are red, whereas Ruby thinks they’re 0% green and 20% red.
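The toy model is easy to simulate. A minimal sketch, assuming each misperception is an independent 20% chance per object (the essay doesn’t say whether Jade’s and Ruby’s errors are correlated):

```python
import random

BIAS = 0.2  # chance of seeing one's favored color in an object of another color

def perceive(true_color: str, favored: str, rng: random.Random) -> str:
    """Report the true color, except for a BIAS chance of seeing the favored one."""
    if true_color != favored and rng.random() < BIAS:
        return favored
    return true_color

rng = random.Random(0)           # fixed seed so the run is reproducible
objects = ["gray"] * 10_000      # the sequence of gray objects
jade = [perceive(c, "green", rng) for c in objects]
ruby = [perceive(c, "red", rng) for c in objects]

def share(reports: list[str], color: str) -> float:
    return sum(r == color for r in reports) / len(reports)

print(f"Jade: {share(jade, 'green'):.0%} green, {share(jade, 'red'):.0%} red")
print(f"Ruby: {share(ruby, 'green'):.0%} green, {share(ruby, 'red'):.0%} red")
```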

As tensions flare, someone who didn’t understand the deep disanalogy between human relations and epistemology might propose that Jade and Ruby should strive to be more “cooperative” and establish higher “trust.”

What does that mean? Honestly, I’m not entirely sure, but I worry that if someone takes high-trust gift-economy-like cultures as their inspiration and model for how to approach intellectual disputes, they’ll end up giving bad advice in practice.

Cooperative human relationships result in everyone getting more of what they want. If Jade wants to believe that the world is greener than it is and Ruby wants to believe that the world is redder than it is, then naïve attempts at “cooperation” might involve Jade making an effort to see things Ruby’s way at Ruby’s behest, and vice versa. But Ruby is only going to insist that Jade make an effort to see it her way when Jade says an item isn’t red. (That’s what Ruby cares about.) Jade is only going to insist that Ruby make an effort to see it her way when Ruby says an item isn’t green. (That’s what Jade cares about.)

If the two (perversely) succeed at seeing things the other’s way, they would end up converging on believing that the sequence of objects is 20% green and 20% red (rather than the 0% green and 0% red that it actually is). They’d be happier, but they would also be wrong. In order for the pair to get the correct answer, then without loss of generality, when Ruby says an object is red, Jade needs to stand her ground: “No, it’s not red; no, I don’t trust you and won’t see things your way; let’s break out the Pantone swatches.” But that doesn’t seem very “cooperative” or “trusting”.
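Continuing the sketch under the same hypothetical assumptions (independent 20% errors on a run of gray objects), this compares the “cooperative” resolution, where each concedes exactly the claims the other cares about, with checking against an external standard. The Pantone swatches are modeled here simply as access to the true colors.

```python
import random

BIAS = 0.2  # chance of seeing one's favored color in an object of another color

def perceive(true_color: str, favored: str, rng: random.Random) -> str:
    return favored if true_color != favored and rng.random() < BIAS else true_color

rng = random.Random(1)           # fixed seed so the run is reproducible
objects = ["gray"] * 10_000
jade = [perceive(c, "green", rng) for c in objects]
ruby = [perceive(c, "red", rng) for c in objects]

# "Cooperative" resolution: Ruby defers whenever Jade calls something green,
# and Jade defers whenever Ruby calls something red.
agreed_green = sum(j == "green" for j in jade) / len(objects)
agreed_red = sum(r == "red" for r in ruby) / len(objects)

# Standing ground and breaking out the swatches: compare against the true colors.
checked_green = sum(c == "green" for c in objects) / len(objects)
checked_red = sum(c == "red" for c in objects) / len(objects)

print(f"sycophantic consensus: {agreed_green:.0%} green, {agreed_red:.0%} red")
print(f"swatch check:          {checked_green:.0%} green, {checked_red:.0%} red")
```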

At this point, a proponent of the high-trust, high-cooperation dynamics that Sabien champions is likely to object that the absurd “20% green, 20% red” mutual-sycophancy outcome in this toy model is clearly not what they meant. (As Sabien takes pains to clarify in “Basics of Rationalist Discourse”, “If two people disagree, it’s tempting for them to attempt to converge with each other, but in fact the right move is for both of them to try to see more of what’s true.”)

Obviously, the mutual-sycophancy outcome is not what proponents of trust and cooperation consciously intend. The problem is that mutual sycophancy seems to be the natural outcome of treating interpersonal conflicts as analogous to epistemic disagreements and trying to resolve both using cooperative practices, when in fact the decision-theoretic structures of those situations are very different. The text of “Truth or Dare” seems to treat the analogy as a strong one; it wouldn’t make sense to spend so many thousands of words discussing gift economies and the eponymous party game and then draw a conclusion about “what constitutes good conduct and productive discourse”, if gift economies and the party game weren’t relevant to what constitutes productive discourse.

“Truth or Dare” seems to suggest that it’s possible to escape the Dark World by excluding the bad guys. “[F]rom the perspective of someone with light world privilege, […] it did not occur to me that you might be hanging around someone with ill intent at all,” Sabien imagines a denizen of the light world saying. “Can you, um. Leave? Send them away? Not be spending time in the vicinity of known or suspected malefactors?”

If we’re talking about holding my associates to a standard of ideal truthseeking (as contrasted to a lower standard of “not using this truth-or-dare game to blackmail me”), then, no, I think I’m stuck spending time in the vicinity of people who are known or suspected to be biased. I can try to mitigate the problem by choosing less biased friends, but when we do disagree, I have no choice but to approach that using the same rules of reasoning that I would use with a possibly-mislabeled barometer, which do not have a particularly cooperative character. Telling us that the right move is for both of us to try to see more of what’s true is tautologically correct but non-actionable; I don’t know how to do that except by my usual methodology, which Sabien has criticized as characteristic of living in a dark world.

That is to say: I do not understand how high-trust, high-cooperation dynamics work. I’ve never seen them. They are utterly outside my experience and beyond my comprehension. What I do know is how to keep my footing in a world of people with different goals from me, which I try to do with what skill and tenacity I can manage.

And if someone should say that I should not be trusted when I try to explain what constitutes good conduct and productive discourse … well, I agree!

I don’t want people to trust me, because I think trust would result in us getting the wrong answer.

I want people to read the words I write, think it through for themselves, and let me know in the comments if I got something wrong.