
"Maybe Lying Doesn't Exist"

An Intuition on the Bayes-Structural Justification for Free Speech Norms

We can metaphorically (but like, hopefully it's a good metaphor) think of speech as being the sum of a positive-sum information-conveying component and a zero-sum social-control/memetic-warfare component. Coalitions of agents that allow their members to convey information amongst themselves will tend to outcompete coalitions that don't, because it's better for the coalition to be able to use all of the information it has.

Therefore, if we want the human species to better approximate a coalition of agents who act in accordance with the game-theoretic Bayes-structure of the universe, we want social norms that reward or at least not-punish information-conveying speech (so that other members of the coalition can learn from it if it's useful to them, and otherwise ignore it).

It's tempting to think that we should want social norms that punish the social-control/memetic-warfare component of speech, thereby reducing internal conflict within the coalition and forcing people's speech to mostly consist of information. This might be a good idea if the rules for punishing the social-control/memetic-warfare component are very clear and specific (e.g., no personal insults during a discussion about something that's not the person you want to insult), but it's alarmingly easy to get this wrong: you think you can punish generalized hate speech without any negative consequences, but you probably won't notice when members of the coalition begin to slowly gerrymander the hate speech category boundary in the service of their own values. Whoops!

Your Periodic Reminder I

Aumann's agreement theorem should not be naïvely misinterpreted to mean that humans should directly try to agree with each other. Your fellow rationalists are merely subsets of reality that may or may not exhibit interesting correlations with other subsets of reality; you don't need to "agree" with them any more than you need to "agree" with an encyclopædia, photograph, pinecone, or rock.

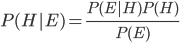

The Fundamental Theorem of Epistemology

(more commonly known as Bayes's theorem, but I like my name better)
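For reference, here is the standard statement of the theorem. The symbols are conventional assumptions rather than anything fixed by the text: H stands for a hypothesis and E for the evidence observed.

```latex
P(H \mid E) = \frac{P(E \mid H)\, P(H)}{P(E)}
```

Read it as: how much you should believe H after seeing E is your prior belief in H, scaled by how much more expected E is if H is true than it is overall.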

Measure

In a sufficiently large universe, everything that can happen happens somewhere, but it's clearly not an even distribution. Flip a quantum coin a hundred times, and there have to be some versions of you who see a hundred heads, but they're so vastly outnumbered by versions of you who see a properly random-looking sequence of heads and tails that it's not worth thinking about: it mostly doesn't happen.
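To put a rough number on "vastly outnumbered," here is a minimal sketch. It assumes a fair coin and treats every 100-flip sequence as equally weighted, as a stand-in for measure. The 40-to-60-heads window is an arbitrary, hypothetical proxy for "random-looking," chosen only for illustration.

```python
from fractions import Fraction
from math import comb

FLIPS = 100  # number of fair coin flips, as in the paragraph above


def fraction_with_heads(k: int, n: int = FLIPS) -> Fraction:
    """Fraction of equally weighted n-flip sequences with exactly k heads."""
    return Fraction(comb(n, k), 2 ** n)


# Exactly one sequence out of 2**100 is all heads.
all_heads = fraction_with_heads(FLIPS)

# Hypothetical proxy for "random-looking": between 40 and 60 heads inclusive.
near_half = sum(fraction_with_heads(k) for k in range(40, 61))

print(float(all_heads))  # on the order of 1e-30
print(float(near_half))  # the large majority of sequences
```

The all-heads branch exists, but its share of the total is 1 in 2^100, which is why it mostly doesn't happen.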

When you write a computer program, or build a bridge, or just think something, we might prefer to take the viewpoint that you're not creating anything so much as you are instantiating that pattern locally, thereby increasing its measure in the multiverse: there might be other ways for that program, that bridge, that thought to come about somewhere, but it's getting some of its support from you.

Decisionmaking is about exerting some control over the distribution of measure over patterns in the multiverse: agent-like patterns select actions so as to allocate measure to their preferred patterns. Maybe there are some versions of me with an ice-cream cone that form by chance, or are deliberately created by alien civilizations investigating how humans respond to ice-cream, but if I get an ice-cream cone, it's mostly because humans evolved and then developed cultures which domesticated dairy cows and cultivated sugar and so on and so forth until eventually I was born and grew up and put effort into acquiring ice-cream. When you decide, you help determine the distribution of what happens to the sections of the multiverse that depend on copies of your decision—choose carefully.

The Demandingness Objection

"Well, I'm not giving up dairy, but I can probably give up meat, and milk is at the very bottom of Brian's table of suffering per kilogram demanded, so I'd be contributing to much less evil than I was before. That's good, right?

"For all the unimaginably terrible things our species do to each other and to other creatures, we're not—we're probably not any worse than the rest of nature. Gazelles suffer terribly as lions eat them alive, but we can't intervene because then the lions would starve, and the gazelles would have a population explosion and starve, too. We have this glorious idea that people need to consent before sex, but male ducks just rape the females, and there's no one to stop it—nothing else besides humans around capable of formulating the proposition, as a proposition, that the torment and horror in the world is wrong and should stop. Animals have been eating each other for hundreds of millions of years; we may be murderous, predatory apes, but we're murderous, predatory apes with Reason—well, sort of—and a care/harm moral foundation that lets some of us, with proper training, to at least wish to be something better.

"I don't actually know much history or biology, but I know enough to want it to not be real, to not have happened that way. But it couldn't have been otherwise. In the absence of an ontologically fundamental creator God, Darwinian evolution is the only way to get purpose from nowhere, design without a designer. My wish for things to have been otherwise ... probably isn't even coherent; any wish for the nature of reality to have been different, can only be made from within reality.

Relativity

"Empathy hurts.

"I'm grateful for being fantastically, unimaginably rich by world-historical standards—and I'm terrified of it being taken away. I feel bad for all the creatures in the past—and future?—who are stuck in a miserable Malthusian equilibrium.

"I simultaneously want to extend my circle of concern out to all sentient life, while personally feeling fear and revulsion towards anything slightly different from what I'm used to.

"Anna keeps telling me I have a skewed perspective on what constitutes a life worth living. I'm inclined to think that animals and poor people have a wretched not-worth-living existence, but perhaps they don't feel so sorry for themselves?—for the same reason that hypothetical transhumans might think my life has been wretched and not worth living, even while I think it's been pretty good on balance.

"But I'm haunted. After my recent ordeal in the psych ward, the part of me that talks described it as 'hellish.' But I was physically safe the entire time. If something so gentle as losing one night of sleep and being taken away from my usual environment was enough to get me to use the h-word, then what about all the actual suffering in the world? What hope is there for transhumanism, if the slightest perturbation sends us spiraling off into madness?

"The other week I was reading Julian Simon's book on overcoming depression; he wrote that depression arises from negative self-comparisons: comparing your current state to some hypothetical more positive state. But personal identity can't actually exist; time can't actually exist the way we think it does. If pain and suffering are bad when they're implemented in my skull, then they have to be bad when implemented elsewhere.

"Anna said that evolutionarily speaking, bad experiences are more intense than good ones because you can lose all your fitness in a short time period. But if 'the brain can't multiply' is a bias—if two bad things are twice as bad as one, no matter where they are in space and time, even if no one is capable of thinking that way—then so is 'the brain can't integrate': long periods of feeling pretty okay count for something, too.

"I'm not a negative utilitarian; I'm a preference utilitarian. I'm not a preference utilitarian; I'm a talking monkey with delusions of grandeur."

I Don't Understand Time

Our subjective experience would have it that time "moves forward": the past is no longer, and the future is indeterminate and "hasn't happened yet." But it can't actually work that way: special relativity tells us that there's no absolute space of simultaneity; given two spacelike separated events, whether one happened "before" or "after" the other depends on how fast you're going and in which direction. This leads us to a "block universe" view: our 3+1 dimensional universe, past, present, and future, simply exists, and the subjective arrow of time somehow arises from our perspective embedded within it.
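The claim about spacelike separated events can be made concrete with the standard Lorentz transformation for a boost along one axis. The notation is a conventional assumption, not something from the text above: Δt and Δx are the time and space separations of the two events in one frame, v is the relative velocity of a second frame, and c is the speed of light.

```latex
\Delta t' = \gamma \left( \Delta t - \frac{v\,\Delta x}{c^{2}} \right),
\qquad
\gamma = \frac{1}{\sqrt{1 - v^{2}/c^{2}}}

% Spacelike separation means |\Delta x| > c\,|\Delta t|, so the velocity
%   v_{0} = \frac{c^{2}\,\Delta t}{\Delta x}
% satisfies |v_{0}| < c and gives \Delta t' = 0: the events are simultaneous
% in that frame. Frames moving faster than v_{0} in the same direction see
% the opposite time ordering.
```

For timelike separated events no such frame exists, which is why cause and effect keep their order for every observer.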

Without knowing much in the way of physics or cognitive science myself, I can only wonder if there aren't still more confusions to be dissolved, intuitions to be unlearned in the service of a more accurate understanding. We know things about the past from our memories and by observing documents; we might then say that memories and documents are forms of probabilistic evidence about another point in spacetime. But predictions about the future are also a form of probabilistic evidence about another point in spacetime. There's a sort of symmetry there, isn't there? Could we perhaps imagine that minds constructed differently from our own wouldn't perceive the same kind of arrow of time that we do?

The Horror of Naturalism

There's this deeply uncomfortable tension between being an animal physiologically incapable of caring about anything other than what happens to me in the near future, and the knowledge of the terrifying symmetry that cannot be unseen: that my own suffering can't literally be more important, just because it's mine. You do some philosophy and decide that your sphere of moral concern should properly extend to all sentient life—whatever sentient turns out to mean—but life is built to survive at the expense of other life.

I want to say, "Why can't everyone just get along and be nice?"—but those are just English words that only make sense to other humans from my native culture, who share the cognitive machinery that generated them. The real world is made out of physics and game theory; my entire concept of "getting along and being nice" is the extremely specific, contingent result of the pattern of cooperation and conflict in my causal past: the billions of corpses on the way to Homo sapiens, the thousands of years of culture on the way to the early twenty-first century United States, the nonshared environmental noise on the way to me. Even if another animal would agree that pleasure is better than pain and peace is better than war, the real world has implementation details that we won't agree on, and the implementation details have to be settled somehow.

I console myself with the concept of decision-theoretic irrelevance: insofar as we construe the function of thought as to select actions, being upset about things that you can't affect is a waste of cognition. It doesn't help anyone for me to be upset about all the suffering in the world when I don't know how to alleviate it. Even in the face of moral and ontological uncertainty, there are still plenty of things-worth-doing. I will play positive-sum games, acquire skills, acquire resources, and use the resources to protect some of the things I care about, making the world slightly less terrible with me than without me. And if I'm left with the lingering intuition that there was supposed to be something else, some grand ideal more important than friendship and Pareto improvements ... I don't remember it anymore.

Continuum Utilitarianism

You hear people talk about positive (maximize pleasure) versus negative (minimize pain) utilitarianism, or average versus total utilitarianism, none of which seem very satisfactory. For example, average utilitarianism taken literally would suggest killing everyone but the happiest person, and total utilitarianism implies what Derek Parfit called the repugnant conclusion: that for any possible world with lots of happy people, the total utilitarian must prefer another possible world with many more people whose lives are just barely worth living.
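Both failure modes show up in a toy calculation. This is only a sketch: the welfare numbers are invented for illustration, and treating a "world" as a list of per-person welfare scores is itself a simplifying assumption.

```python
from statistics import mean


def total_utility(world: list[int]) -> int:
    """Total utilitarianism: add up everyone's welfare."""
    return sum(world)


def average_utility(world: list[int]) -> float:
    """Average utilitarianism: mean welfare per person."""
    return mean(world)


# Hypothetical welfare scores; higher is better, 1 is "barely worth living".
mixed_world = [9, 5, 2]
happiest_only = [max(mixed_world)]  # everyone but the happiest person removed

happy_world = [800] * 1_000             # a thousand very happy people
barely_worth_living = [1] * 1_000_000   # a million lives barely worth living

# Taken literally, the average view ranks the one-person world higher.
print(average_utility(happiest_only) > average_utility(mixed_world))

# The total view ranks the huge barely-worth-living world higher.
print(total_utility(barely_worth_living) > total_utility(happy_world))
```

Both comparisons come out true, which is the point: each aggregation rule, applied mechanically, endorses a world most people would reject.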

But really, it shouldn't be that surprising that there's no simple, intuitively satisfying population ethics, because any actual preference ordering over possible worlds is going to have to make tradeoffs: how much pleasure and how much pain distributed across how many people's lives in what manner, what counts as a "person," &c.

Actually Personal Responsibility

Dear reader, you occasionally hear people with conservative tendencies complain that the problem with Society today is that people lack personal responsibility: that the young and the poor need to take charge of themselves and stop mooching off their parents or the government: to shut up, do their homework, and get a job. I lack any sort of conservative tendency and would never say that sort of thing, but I would endorse a related-but-quite-distinct concept that I want to refer to using the same phrase personal responsibility, as long as it's clear from context that I don't mean it in the traditional, conservative way.

The problem with the traditional sense of personal responsibility is that it's not personal; it's an attempt to shame people into doing what the extant social order expects of them. I'm aware that that kind of social pressure often does serve useful purposes—but I think it's possible to do better. The local authorities really don't know everything; the moral rules and social norms you were raised with can actually be mistaken in all sorts of disastrous ways that no one warned you about. So I think people should strive to take personal responsibility for their own affairs not as a burdensome duty to Society, but because it will actually result in better outcomes, both for the individual in question, and for Society.

Mathematics Is the Subfield of Philosophy That Humans Are Good At

By philosophy I understand the discipline of discovering truths about reality by means of thinking very carefully. Contrast this with science, where we try to come up with theories that predict our observations. Philosophers of number have observed that the first ten trillion nontrivial zeros of the Riemann zeta function are on the critical line, but people don't speak of the Riemann hypothesis as being almost certainly true, not necessarily because they anticipate a counterexample lurking somewhere above ½ + 10²⁶i (although "large" counterexamples are not unheard-of in the philosophy of numbers), but rather because while empirical examination is certainly helpful, it's not really what we do. Mere empiricism is usually sufficient for knowing (with high probability) what is true, but as philosophers, we want to explain why, and moreover, why it could not have been otherwise.

When we try this on topics like numbers or shapes, it works really, really well: our philosophers quickly reach ironclad consensuses about matters far removed from human intuition. When we try it on topics like justice or existence ... it doesn't work so well. I think it's sad.