A Surprising Development in the Study of Multi-layer Parameterized Graphical Function Approximators

As a programmer and epistemology enthusiast, I’ve been studying some statistical modeling techniques lately! It’s been boodles of fun, and might even prove useful in a future dayjob if I decide to pivot my career away from the backend web development roles I’ve taken in the past.

More specifically, I’ve mostly been focused on multi-layer parameterized graphical function approximators, which map inputs to outputs via a sequence of affine transformations composed with nonlinear “activation” functions.

(Some authors call these “deep neural networks” for some reason, but I like my name better.)

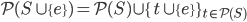

It’s a curve-fitting technique: by setting the multiplicative factors and additive terms appropriately, a sufficiently large multi-layer parameterized graphical function approximator can approximate any continuous function on a bounded domain to any desired accuracy. For a popular choice of “activation” rule which takes the maximum of the input and zero, the curve is specifically a piecewise-linear function. We iteratively improve the approximation f(x, θ) by adjusting the parameters θ a small step against the derivative of some error metric on the current approximation’s fit to some example input–output pairs (x, y), so that the error goes down, which some authors call “gradient descent” for some reason. (The mean squared error (f(x, θ) − y)² is a popular choice for the error metric, as is the negative log likelihood −log P(y | f(x, θ)). Some authors call these “loss functions” for some reason.)
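To make the curve-fitting picture concrete, here is a minimal sketch in plain Python. Everything specific in it is an assumption chosen for illustration: one hidden layer of eight units, the target curve y = x², the step size, and the helper names (`init_params`, `gradient_step`, and so on). It is a toy under those assumptions, not how anyone fits a serious model.

```python
import random

def relu(z):
    """The "activation" rule: the maximum of the input and zero."""
    return max(0.0, z)

def init_params(hidden, rng):
    # theta holds the multiplicative factors (w1, w2) and additive terms (b1, b2).
    return {
        "w1": [rng.uniform(-1.0, 1.0) for _ in range(hidden)],
        "b1": [rng.uniform(-1.0, 1.0) for _ in range(hidden)],
        "w2": [rng.uniform(-1.0, 1.0) for _ in range(hidden)],
        "b2": 0.0,
    }

def f(x, theta):
    """Affine map, then ReLU, then another affine map: piecewise-linear in x."""
    return theta["b2"] + sum(
        w2 * relu(w1 * x + b1)
        for w1, b1, w2 in zip(theta["w1"], theta["b1"], theta["w2"])
    )

def mse(theta, pairs):
    return sum((f(x, theta) - y) ** 2 for x, y in pairs) / len(pairs)

def gradient_step(theta, pairs, lr):
    """Move theta one small step against the derivative of the mean squared error."""
    hidden = len(theta["w1"])
    g_w1, g_b1, g_w2, g_b2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden, 0.0
    for x, y in pairs:
        d_out = 2.0 * (f(x, theta) - y) / len(pairs)  # d(MSE)/d(f(x, theta))
        g_b2 += d_out
        for j in range(hidden):
            pre = theta["w1"][j] * x + theta["b1"][j]
            g_w2[j] += d_out * relu(pre)
            if pre > 0.0:  # ReLU passes the derivative through only where it is active
                g_w1[j] += d_out * theta["w2"][j] * x
                g_b1[j] += d_out * theta["w2"][j]
    for j in range(hidden):
        theta["w1"][j] -= lr * g_w1[j]
        theta["b1"][j] -= lr * g_b1[j]
        theta["w2"][j] -= lr * g_w2[j]
    theta["b2"] -= lr * g_b2

# Example input-output pairs sampled from y = x^2 on [-1, 1] (an arbitrary target).
pairs = [(i / 10.0, (i / 10.0) ** 2) for i in range(-10, 11)]
theta = init_params(hidden=8, rng=random.Random(0))
initial_loss = mse(theta, pairs)
for _ in range(2000):
    gradient_step(theta, pairs, lr=0.01)
final_loss = mse(theta, pairs)
print(f"MSE before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

After fitting, `f(0.55, theta)` gives an estimate at an x that was not among the example pairs, which is all that “generalization” refers to here.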

Basically, the big empirical surprise of the previous decade is that given a lot of desired input–output pairs (x, y) and the proper engineering know-how, you can use large amounts of computing power to find parameters θ to fit a function approximator that “generalizes” well—meaning that if you compute ŷ = f(x, θ) for some x that wasn’t in any of your original example input–output pairs (which some authors call “training” data for some reason), it turns out that ŷ is usually pretty similar to the y you would have used in an example (x, y) pair.

It wasn’t obvious beforehand that this would work! You’d expect that if your function approximator has more parameters than you have example input–output pairs, it would overfit, implementing a complicated function that reproduced the example input–output pairs but outputted crazy nonsense for other choices of x—the more expressive function approximator proving useless for the lack of evidence to pin down the correct approximation.

And that is what we see for function approximators with only slightly more parameters than example input–output pairs, but for sufficiently large function approximators, the trend reverses and “generalization” improves—the more expressive function approximator proving useful after all, as it admits algorithmically simpler functions that fit the example pairs.

The other week I was talking about this to an acquaintance who seemed puzzled by my explanation. “What are the preconditions for this intuition about neural networks as function approximators?” they asked. (I paraphrase only slightly.) “I would assume this is true under specific conditions,” they continued, “but I don’t think we should expect such niceness to hold under capability increases. Why should we expect this to carry forward?”

I don’t know where this person was getting their information, but this made zero sense to me. I mean, okay, when you increase the number of parameters in your function approximator, it gets better at representing more complicated functions, which I guess you could describe as “capability increases”?

But multi-layer parameterized graphical function approximators created by iteratively using the derivative of some error metric to improve the quality of the approximation are still, actually, function approximators. Piecewise-linear functions are still piecewise-linear functions even when there are a lot of pieces. What did you think it was doing?

Multi-layer Parameterized Graphical Function Approximators Have Many Exciting Applications

To be clear, you can do a lot with function approximation!

For example, if you assemble a collection of desired input–output pairs (x, y) where the x is an array of pixels depicting a handwritten digit and y is a character representing which digit, then you can fit a “convolutional” multi-layer parameterized graphical function approximator to approximate the function from pixel-arrays to digits—effectively allowing computers to read handwriting.

Such techniques have proven useful in all sorts of domains where a task can be conceptualized as a function from one data distribution to another: image synthesis, voice recognition, recommender systems—you name it. Famously, by approximating the next-token function in tokenized internet text, large language models can answer questions, write code, and perform other natural-language understanding tasks.

I could see how someone reading about computer systems performing cognitive tasks previously thought to require intelligence might be alarmed—and become further alarmed when reading that these systems are “trained” rather than coded in the manner of traditional computer programs. The summary evokes imagery of training a wild animal that might turn on us the moment it can seize power and reward itself rather than being dependent on its masters.

But “training” is just a suggestive name. It’s true that we don’t have a mechanistic understanding of how function approximators perform tasks, in contrast to traditional computer programs whose source code was written by a human. It’s plausible that this opacity represents grave risks, if we create powerful systems that we don’t know how to debug.

But whatever the real risks are, any hope of mitigating them is going to depend on acquiring the most accurate possible understanding of the problem. If the problem is itself largely one of our own lack of understanding, it helps to be specific about exactly which parts we do and don’t understand, rather than surrendering the entire field to a blurry aura of mystery and despair.

An Example of Applying Multi-layer Parameterized Graphical Function Approximators in Success-Antecedent Computation Boosting

One of the exciting things about multi-layer parameterized graphical function approximators is that they can be combined with other methods for the automation of cognitive tasks (which is usually called “computing”, but some authors say “artificial intelligence” for some reason).

In the spirit of being specific about exactly which parts we do and don’t understand, I want to talk about Mnih et al. 2013’s work on getting computers to play classic Atari games (like Pong, Breakout, or Space Invaders). This work is notable as one of the first high-profile examples of using multi-layer parameterized graphical function approximators in conjunction with success-antecedent computation boosting (which some authors call “reinforcement learning” for some reason).

If you only read the news—if you’re not in tune with there being things to read besides news—I could see this result being quite alarming. Digital brains learning to play video games at superhuman levels from the raw pixels, rather than because a programmer sat down to write an automation policy for that particular game? Are we not already in the shadow of the coming race?

But people who read textbooks and not just news, being no less impressed by the result, are often inclined to take a subtler lesson from any particular headline-grabbing advance.

Mnih et al.’s Atari result built off the technique of Q-learning introduced two decades prior. Given a discrete-time present-state-based outcome-valued stochastic control problem (which some authors call a “Markov decision process” for some reason), Q-learning concerns itself with defining a function Q(s, a) that describes the value of taking action a while in state s, for some discrete sets of states and actions. For example, to describe the problem faced by a policy for a grid-based video game, the states might be the squares of the grid, and the available actions might be moving left, right, up, or down. The Q-value for being on a particular square and taking the move-right action might be the expected change in the game’s score from doing that (including a scaled-down expectation of score changes from future actions after that).

Upon finding itself in a particular state s, a Q-learning policy will usually perform the action with the highest Q(s, a), “exploiting” its current beliefs about the environment, but with some probability it will “explore” by taking a random action. The predicted outcomes of its decisions are compared to the actual outcomes to update the function Q(s, a), which can simply be represented as a table with as many rows as there are possible states and as many columns as there are possible actions. We have theorems to the effect that as the policy thoroughly explores the environment, it will eventually converge on the correct Q(s, a).
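A minimal sketch of the table-based version, in plain Python. The 3×3 grid, the reward of 1 for reaching the bottom-right square, and the particular values of `alpha`, `gamma`, and `epsilon` are all assumptions made up for illustration; only the shape of the algorithm (explore or exploit, then nudge the table entry toward the observed outcome) comes from the description above.

```python
import random

SIZE = 3                                        # hypothetical 3x3 grid
ACTIONS = [(0, -1), (0, 1), (-1, 0), (1, 0)]    # left, right, up, down as (d_row, d_col)
START, GOAL = 0, SIZE * SIZE - 1

def step(state, action):
    """Move one square (staying put at walls); reward 1 for reaching the goal."""
    row, col = divmod(state, SIZE)
    d_row, d_col = ACTIONS[action]
    row = min(max(row + d_row, 0), SIZE - 1)
    col = min(max(col + d_col, 0), SIZE - 1)
    next_state = row * SIZE + col
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done

def greedy_action(q_row, rng):
    """Pick an action with the highest Q-value, breaking ties at random."""
    best = max(q_row)
    return rng.choice([a for a, q in enumerate(q_row) if q == best])

def q_learning(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # The table: one row per state, one column per action.
    Q = [[0.0] * len(ACTIONS) for _ in range(SIZE * SIZE)]
    for _ in range(episodes):
        state = START
        for _ in range(100):  # cap on episode length
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))   # explore
            else:
                action = greedy_action(Q[state], rng)  # exploit
            next_state, reward, done = step(state, action)
            # Compare the predicted outcome with the observed one and update.
            target = reward if done else reward + gamma * max(Q[next_state])
            Q[state][action] += alpha * (target - Q[state][action])
            state = next_state
            if done:
                break
    return Q

def greedy_path(Q, max_steps=20):
    """Follow the highest-valued action from START and record the states visited."""
    rng = random.Random(0)
    path, state = [START], START
    for _ in range(max_steps):
        state, _, done = step(state, greedy_action(Q[state], rng))
        path.append(state)
        if done:
            break
    return path

Q = q_learning()
path = greedy_path(Q)
print(path)
```

Note that `Q` here really is just a list of rows: a state the policy has never visited has a row of zeros and nothing to go on.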

But Q-learning as originally conceived doesn’t work for the Atari games studied by Mnih et al., because it assumes a discrete set of possible states that could be represented with the rows in a table. This is intractable for problems where the state of the environment varies continuously. If a “state” in Pong is a 6-tuple of floating-point numbers representing the player’s paddle position, the opponent’s paddle position, and the x- and y-coordinates of the ball’s position and velocity, then there’s no way for the traditional Q-learning algorithm to base its behavior on its past experiences without having already seen that exact conjunction of paddle positions, ball position, and ball velocity, which almost never happens. So Mnih et al.’s great innovation was—

(Wait for it …)

—to replace the table representing Q(s, a) with a multi-layer parameterized graphical function approximator! By approximating the mapping from state–action pairs to discounted-sums-of-“rewards”, the “neural network” allows the policy to “generalize” from its experience, taking similar actions in relevantly similar states, without having visited those exact states before. There are a few other minor technical details needed to make it work well, but that’s the big idea.
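Here is a rough sketch of what the swap looks like, again in plain Python. It is not Mnih et al.’s implementation: the layer sizes, the step size, the made-up 6-tuple state, and the helper names (`init_q_network`, `q_values`, `td_update`) are all assumptions for illustration, and the other technical details that make the real thing train stably are left out. The point is only that `q_values` takes any state vector, visited or not, where the table needed an exact row.

```python
import random

def init_q_network(state_dim, n_actions, hidden, rng):
    scale = 0.5
    return {
        "W1": [[rng.uniform(-scale, scale) for _ in range(state_dim)] for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "W2": [[rng.uniform(-scale, scale) for _ in range(hidden)] for _ in range(n_actions)],
        "b2": [0.0] * n_actions,
    }

def hidden_activations(state, theta):
    """First affine map followed by ReLU."""
    return [max(0.0, sum(w * x for w, x in zip(row, state)) + b)
            for row, b in zip(theta["W1"], theta["b1"])]

def q_values(state, theta):
    """Approximate Q(state, a) for every action a at once."""
    h = hidden_activations(state, theta)
    return [sum(w * hj for w, hj in zip(row, h)) + b
            for row, b in zip(theta["W2"], theta["b2"])]

def td_update(theta, state, action, reward, next_state, done, gamma=0.99, lr=0.01):
    """Nudge the parameters so Q(state, action) moves toward the observed target."""
    target = reward if done else reward + gamma * max(q_values(next_state, theta))
    h = hidden_activations(state, theta)
    q_sa = sum(w * hj for w, hj in zip(theta["W2"][action], h)) + theta["b2"][action]
    delta = target - q_sa  # prediction error for this one transition
    for j, hj in enumerate(h):
        w2 = theta["W2"][action][j]  # read before it is modified below
        theta["W2"][action][j] += lr * delta * hj
        if hj > 0.0:  # only active ReLU units pass the derivative back
            for i, x in enumerate(state):
                theta["W1"][j][i] += lr * delta * w2 * x
            theta["b1"][j] += lr * delta * w2
    theta["b2"][action] += lr * delta
    return delta

rng = random.Random(0)
theta = init_q_network(state_dim=6, n_actions=3, hidden=16, rng=rng)
state = (0.50, 0.40, 0.30, 0.70, 0.01, -0.02)   # made-up Pong-like 6-tuple
error_before = td_update(theta, state, action=1, reward=1.0, next_state=state, done=True)
error_after = 1.0 - q_values(state, theta)[1]
nearby = (0.51, 0.40, 0.31, 0.69, 0.01, -0.02)  # never visited, still gets an estimate
print(abs(error_before), abs(error_after), q_values(nearby, theta))
```

One design choice worth flagging: this sketch outputs one value per action from a single state input, which is a common way to represent a function of state–action pairs when the action set is small and discrete.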

And understanding the big idea probably changes your perspective on the headline-grabbing advance. (It certainly did for me.) “Deep learning is like evolving brains; it solves problems and we don’t know how” is an importantly different story from “We swapped out a table for a multi-layer parameterized graphical function approximator in this specific success-antecedent computation boosting algorithm, and now it can handle continuous state spaces.”

Risks From Learned Approximation

When I solicited reading recommendations from people who ought to know about risks of harm from statistical modeling techniques, I was directed to a list of reputedly fatal-to-humanity problems, or “lethalities”.

Unfortunately, I don’t think I’m qualified to evaluate the list as a whole; I would seem to lack some necessary context. (The author keeps using the term “AGI” without defining it, and adjusted gross income doesn’t make sense in context.)

What I can say is that when the list discusses the kinds of statistical modeling techniques I’ve been studying lately, it starts to talk funny. I don’t think someone who’s been reading the same textbooks as I have (like Prince 2023 or Bishop and Bishop 2024) would write like this:

Even if you train really hard on an exact loss function, that doesn’t thereby create an explicit internal representation of the loss function inside an AI that then continues to pursue that exact loss function in distribution-shifted environments. Humans don’t explicitly pursue inclusive genetic fitness; outer optimization even on a very exact, very simple loss function doesn’t produce inner optimization in that direction. […] This is sufficient on its own […] to trash entire categories of naive alignment proposals which assume that if you optimize a bunch on a loss function calculated using some simple concept, you get perfect inner alignment on that concept.

To be clear, I agree that if you fit a function approximator by iteratively adjusting its parameters against the derivative of some loss function on example input–output pairs, that doesn’t create an explicit internal representation of the loss function inside the function approximator.

It’s just—why would you want that? And really, what would that even mean? If I use the mean squared error loss function to approximate a set of data points in the plane with a line (which some authors call a “linear regression model” for some reason), obviously the line itself does not somehow contain a representation of general squared-error-minimization. The line is just a line. The loss function defines how my choice of line responds to the data I’m trying to approximate with the line. (The mean squared error has some elegant mathematical properties, but is more sensitive to outliers than the mean absolute error.)
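A small sketch of the line-fitting case, using the standard closed-form least-squares solution. The data points and the function name `fit_line_mse` are made up for illustration. What comes back is a pair of numbers, slope and intercept, and nothing else; the second half uses the simplest possible model, a single constant, to show the outlier sensitivity mentioned in the parenthetical.

```python
import statistics

def fit_line_mse(points):
    """Closed-form least-squares line: returns (slope, intercept) minimizing MSE."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    s_xx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = s_xy / s_xx
    return slope, mean_y - slope * mean_x

clean = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]   # exactly y = 2x + 1
with_outlier = clean[:-1] + [(3.0, 70.0)]                  # one corrupted point

line = fit_line_mse(clean)
skewed_line = fit_line_mse(with_outlier)
print(line, skewed_line)   # each "model" is just two numbers

# For a constant model, the MSE-minimizer is the mean and the
# MAE-minimizer is the median; only the mean chases the outlier.
ys = [y for _, y in with_outlier]
print(statistics.mean(ys), statistics.median(ys))
```

The same loss function produced two different lines from two different datasets, which is the sense in which the loss only says how the fit responds to the data.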

It’s the same thing for piecewise-linear functions defined by multi-layer parameterized graphical function approximators: the model is the dataset. It’s just not meaningful to talk about what a loss function implies, independently of the training data. (Mean squared error of what? Negative log likelihood of what? Finish the sentence!)

This confusion about loss functions seems to be linked to a particular theory of how statistical modeling techniques might be dangerous, in which “outer” training results in the emergence of an “inner” intelligent agent. If you expect that, and you expect intelligent agents to have a “utility function”, you might be inclined to think of “gradient descent” “training” as trying to transfer an outer “loss function” into an inner “utility function”, and perhaps to think that the attempted transfer primarily doesn’t work because “gradient descent” is an insufficiently powerful optimization method.

I guess the emergence of inner agents might be possible? I can’t rule it out. (“Functions” are very general, so I can’t claim that a function approximator could never implement an agent.) Maybe it would happen at some scale?

But taking the technology in front of us at face value, that’s not my default guess at how the machine intelligence transition would go down. If I had to guess, I’d imagine someone deliberately building an agent using function approximators as a critical component, rather than your function approximator secretly having an agent inside of it.

That’s a different threat model! If you’re trying to build a good agent, or trying to prohibit people from building bad agents using coordinated violence (which some authors call “regulation” for some reason), it matters what your threat model is!

(Statistical modeling engineer Jack Gallagher has described his experience of this debate as “like trying to discuss crash test methodology with people who insist that the wheels must be made of little cars, because how else would they move forward like a car does?”)

I don’t know how to build a general agent, but contemporary computing research offers clues as to how function approximators can be composed with other components to build systems that perform cognitive tasks.

Consider AlphaGo and its successor AlphaZero. In AlphaGo, one function approximator is used to approximate a function from board states to move probabilities. Another is used to approximate the function from board states to game outcomes, where the outcome is +1 when one player has certainly won, −1 when the other player has certainly won, and a proportionately intermediate value indicating who has the advantage when the outcome is still uncertain. The system plays both sides of a game, using the board-state-to-move-probability function and board-state-to-game-outcome function as heuristics to guide a search algorithm which some authors call “Monte Carlo tree search”. The board-state-to-move-probability function approximation is improved by adjusting its parameters in the direction that decreases its cross-entropy with the move distribution found by the search algorithm. The board-state-to-game-outcome function approximation is improved by adjusting its parameters in the direction that decreases its squared difference with the self-play game’s ultimate outcome.
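The two error metrics in that description can be sketched in a few lines of plain Python. This is a toy under loud assumptions: the three-move distribution, the starting value estimate, and the step sizes are made up, and the steps are taken directly on the approximators’ outputs (move logits and a single value) as a stand-in for adjusting the real systems’ parameters. It shows the shape of the two updates, not the published training procedure.

```python
import math

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(target_probs, predicted_probs):
    """-sum_i target_i * log(predicted_i); smallest when the two distributions match."""
    return -sum(t * math.log(p) for t, p in zip(target_probs, predicted_probs) if t > 0.0)

def squared_error(predicted_value, outcome):
    return (predicted_value - outcome) ** 2

def policy_step(logits, search_probs, lr=0.5):
    """One step against d(cross-entropy)/d(logits), which is softmax(logits) - search_probs."""
    probs = softmax(logits)
    return [z - lr * (p - t) for z, p, t in zip(logits, probs, search_probs)]

def value_step(predicted_value, outcome, lr=0.1):
    """One step against d(squared error)/d(value), which is 2 * (value - outcome)."""
    return predicted_value - lr * 2.0 * (predicted_value - outcome)

search_probs = [0.7, 0.2, 0.1]   # made-up move distribution from the search
logits = [0.0, 0.0, 0.0]         # stand-in for the move-probability approximator's output
policy_loss_before = cross_entropy(search_probs, softmax(logits))
logits = policy_step(logits, search_probs)
policy_loss_after = cross_entropy(search_probs, softmax(logits))

value, outcome = 0.2, 1.0        # made-up value estimate; the self-play game was won (+1)
value_loss_before = squared_error(value, outcome)
value = value_step(value, outcome)
value_loss_after = squared_error(value, outcome)
print(policy_loss_before, policy_loss_after, value_loss_before, value_loss_after)
```

In both cases the target (the search’s move distribution, the game’s outcome) comes from outside the approximator, and the update just pulls the approximation toward it.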

This kind of design is not trivially safe. A similarly superhuman system that operated in the real world (instead of the restricted world of board games) that iteratively improved an action-to-money-in-this-bank-account function seems like it would have undesirable consequences, because if the search discovered that theft or fraud increased the amount of money in the bank account, then the action-to-money function approximator would generalizably steer the system into doing more theft and fraud.

Statistical modeling engineers have a saying: if you’re surprised by what your neural net is doing, you haven’t looked at your training data closely enough. The problem in this hypothetical scenario is not that multi-layer parameterized graphical function approximators are inherently unpredictable, or must necessarily contain a power-seeking consequentialist agent in order to do any useful cognitive work. The problem is that you’re approximating the wrong function and getting what you measure. The failure would still occur if the function approximator “generalizes” from its “training” data the way you’d expect. (If you can recognize fraud and theft, it’s easy enough to just not use that data as examples to approximate, but by hypothesis, this system is only looking at the account balance.) This doesn’t itself rule out more careful designs that use function approximators to approximate known-trustworthy processes and don’t search harder than their representation of value can support.

This may be cold comfort to people who anticipate a competitive future in which cognitive automation designs that more carefully respect human values will foreseeably fail to keep up with the frontier of more powerful systems that do search harder. It may not matter to the long-run future of the universe that you can build helpful and harmless language agents today, if your civilization gets eaten by more powerful and unfriendlier cognitive automation designs some number of years down the line. As a humble programmer and epistemology enthusiast, I have no assurances to offer, no principle or theory to guarantee everything will turn out all right in the end. Just a conviction that, whatever challenges confront us in the future, we’ll be in a better position to face them by understanding the problem in as much detail as possible.

Bibliography

Bishop, Christopher M., and Hugh Bishop. 2024. Deep Learning: Foundations and Concepts. Cham: Springer. https://www.bishopbook.com/

Mnih, Volodymyr, Koray Kavukcuoglu, David Silver, Alex Graves, Ioannis Antonoglou, Daan Wierstra, and Martin Riedmiller. 2013. “Playing Atari with Deep Reinforcement Learning.” https://arxiv.org/abs/1312.5602

Prince, Simon J.D. 2023. Understanding Deep Learning. Cambridge, MA: MIT Press. http://udlbook.com

Sutton, Richard S., and Andrew G. Barto. 2018. Reinforcement Learning: An Introduction. 2nd ed. Cambridge, MA: MIT Press.