Previously, I wrote about how I was considering going back to San Francisco State University for two semesters to finish up my Bachelor’s degree in math.

So, I did that. I think it was a good decision! I got more out of it than I expected.

To be clear, “better than I expected” is not an endorsement of college. SF State is still the same communist dystopia I remember from a dozen years ago—a bureaucratic command economy dripping in propaganda about how indispensable and humanitarian it is, whose subjects’ souls have withered to the point where, even if they don’t quite believe the propaganda, they can’t conceive of life and work outside the system.

But it didn’t hurt this time, because I had a sense of humor about it now—and a sense of perspective (thanks to life experience, no thanks to school). Ultimately, policy debates should not appear one-sided: if things are terrible, it’s probably not because people are choosing the straightforwardly terrible thing for no reason whatsoever, with no trade-offs, coordination problems, or nonobvious truths making the terrible thing look better than it is. The thing that makes life under communism unbearable is the fact that you can’t leave. Having escaped, and coming back as a visiting dignitary, one is in a better position to make sense of how and why the regime functions—the problems it solves, at whatever cost in human lives or dignity—the forces that make it stable if not good.

Doing It Right This Time (Math)

The undergraduate mathematics program at SFSU has three tracks: for “advanced studies”, for teaching, and for liberal arts. My student record from 2013 was still listed as on the advanced studies track. In order to graduate as quickly as possible, I switched to the liberal arts track, which, beyond a set of “core” courses, only requires five electives numbered 300 or higher. The only core course I hadn’t completed was “Modern Algebra I”, and I had done two electives in Fall 2012 (“Mathematical Optimization” and “Probability and Statistics I”), so I only had four math courses (including “Modern Algebra I”) to complete for the major.

“Real Analysis II” (Fall 2024)

My last class at SF State in Spring 2013 (before getting rescued by the software industry) had been “Real Analysis I” with Prof. Alex Schuster. I regret that I wasn’t in a state to properly focus and savor it at the time: I had a pretty bad sleep-deprivation-induced psychotic break in early February 2013 and for a few months thereafter was mostly just trying to hold myself together. I withdrew from my other classes (“Introduction to Functions of a Complex Variable” and “Urban Issues of Black Children and Youth”) and ended up getting a B−.

My psychiatric impairment that semester was particularly disappointing because I had been looking forward to “Real Analysis I” as my first “serious” math class, being concerned with proving theorems rather than the “school-math” that most people associate with the subject, of applying given techniques to given problem classes. I had wanted to take it concurrently with the prerequisite, “Exploration and Proof” (which I didn’t consider sufficiently “serious”) upon transferring to SFSU the previous semester, but was not permitted to. I had emailed Prof. Schuster asking to be allowed to enroll, with evidence that I was ready (attaching a PDF of a small result I had proved about analogues of π under the p-norm, and including the contact email of Prof. Robert Hasner of Diablo Valley College, who had been my “Calculus III” professor and had agreed to vouch for my preparedness), but he didn’t reply.

Coming back eleven years later, I was eager to make up for that disappointment by picking up where I left off in “Real Analysis II” with the same Prof. Schuster. On the first day of instruction, I wore a collared shirt and tie (and mask, having contracted COVID-19 while traveling the previous week) and came to the classroom early to make a point of marking my territory, using the whiteboard to write out the first part of a proof of the multivariate chain rule that I was working through in Bernd S. W. Schröder’s Mathematical Analysis: A Concise Introduction—my favorite analysis textbook, which I had discovered in the SFSU library in 2012 and of which I subsequently bought my own copy. (I would soon check up on the withdrawal stamp sheet in the front of the library’s copy. No one had checked it out in the intervening twelve years.)

The University Bulletin officially titled the course “Real Analysis II: Several Variables”, so you’d expect that getting a leg up on the multidimensional chain rule would be studying ahead for the course, but it turned out that the Bulletin was lying relative to the syllabus that Prof. Schuster had emailed out the week before: we would be covering series, series of functions, and metric space topology. Fine. (I was already pretty familiar with metric space topology, but even my “non-epsilon” calculus-level knowledge of series was weak; to me, the topic stunk of school.)

“Real II” was an intimate class that semester, befitting SFSU’s status as a garbage-tier institution: there were only seven or eight students enrolled. It was one of many classes in the department that were cross-listed as both a graduate (“MATH 770”) and upper-division undergraduate course (“MATH 470”). I was the only student enrolled in 470. The university website hosted an old syllabus from 2008 which said that the graduate students would additionally write a paper on an approved topic, but that wasn’t a thing the way Prof. Schuster was teaching the course. Partway through the semester, I was added to Canvas (the online course management system) for the 770 class, to save Prof. Schuster and the TA the hassle of maintaining both.

The textbook was An Introduction to Analysis (4th edition) by William R. Wade, the same book that had been used for “Real I” in Spring 2013. It felt in bad taste for reasons that are hard to precisely articulate. I want to say the tone is patronizing, but don’t feel like I could defend that judgement in debate against someone who doesn’t share it. What I love about Schröder is how it tries to simultaneously be friendly to the novice (the early chapters sprinkling analysis tips and tricks as numbered “Standard Proof Techniques” among the numbered theorems and definitions) while also showcasing the fearsome technicality of the topic in excruciatingly detailed estimates (proofs involving chains of inequalities, typically ending on “< ε”). In contrast, Wade often feels like it’s hiding something from children who are now in fact teenagers.

The assignments were a lot of work, but that was good. It was what I was there for—to prove that I could do the work. I could do most of the proofs with some effort. At SFSU in 2012–2013, I remembered submitting paper homework, but now, everything was uploaded to Canvas. I did all my writeups in LyX, a GUI editor for LaTeX.

One thing that had changed very recently, not about SFSU, but about the world, was the availability of large language models, which had in the GPT-4 era become good enough to be useful tutors on standard undergrad material. They definitely weren’t totally reliable, but human tutors aren’t always reliable, either. I adopted the policy that I was allowed to consult LLMs for a hint when I got stuck on homework assignments, citing the fact that I had gotten help in my writeup. Prof. Schuster didn’t object when I inquired about the propriety of this at office hours. (I also cited office-hours hints in my writeups.)

Prof. Schuster held his office hours in the math department conference room rather than his office, which created a nice environment for multiple people to work or socialize, in addition to asking Prof. Schuster questions. I came almost every time, whether or not I had an analysis question for Prof. Schuster. Often there were other students from “Real II” or Prof. Schuster’s “Real I” class there, or a lecturer who also enjoyed the environment, but sometimes it was just me.

Office hours chatter didn’t confine itself to math. Prof. Schuster sometimes wore a Free Palestine bracelet. I asked him what I should read to understand the pro-Palestinian position, which had been neglected in my Jewish upbringing. He recommended Rashid Khalidi’s The Hundred Years’ War on Palestine, which I read and found informative (in contrast to the student pro-Palestine demonstrators on campus, whom I found anti-persuasive).

I got along fine with the other students but do not seem to have formed any lasting friendships. The culture of school didn’t feel quite as bad as I remembered. It’s unclear to me how much of this is due to my memory having stored a hostile caricature, and how much is due to my being less sensitive to it this time. When I was at SFSU a dozen years ago, I remember seething with hatred at how everyone talked about their studies in terms of classes and teachers and grades, rather than about the subject matter in itself. There was still a lot of that—bad enough that I complained about it at every opportunity—but I wasn’t seething with hatred anymore, as if I had come to terms with it as mere dysfunction and not sacrilege. I only cried while complaining about it a couple times.

One of my signature gripes was about the way people in the department habitually referred to courses by number rather than title, which felt like something out of a dystopian YA novel. A course title like “Real Analysis II” at least communicates that the students are working on real analysis, even if the opaque “II” doesn’t expose which real-analytic topics are covered. In contrast, a course number like “MATH 770” doesn’t mean anything outside of SFSU’s bureaucracy. It isn’t how people would talk if they believed there was a subject matter worth knowing about except insofar as the customs of bureaucratic servitude demanded it.

There were two examinations: a midterm, and the final. Each involved stating some definitions, identifying some propositions as true or false with a brief justification, and writing two or three proofs. A reference sheet was allowed, which made the definitions portion somewhat farcical as a test of anything more than having bothered to prepare a reference sheet. (I objected to Prof. Schuster calling it a “cheat sheet.” Since he was allowing it, it wasn’t “cheating”!)

I did okay. I posted a 32.5/40 (81%) on the midterm. I’m embarrassed by my performance on the final. It looked easy, and I left the examination room an hour early after providing an answer to all the questions, only to realize a couple hours later that I had completely botched a compactness proof. Between that gaffe, the midterm, and my homework grades, I was expecting to end up with a B+ in the course. (How mortifying—to have gone back to school almost specifically for this course and then not even get an A.) But when the grades came in, it ended up being an A: Prof. Schuster only knocked off 6 points for the bogus proof, for a final exam grade of 44/50 (88%), and had a policy of discarding the midterm grade when the final exam grade was higher. It still seemed to me that that should have probably worked out to an A− rather than an A, but it wasn’t my job to worry about that.

“Probability Models” (Fall 2024)

In addition to the rarefied math-math of analysis, the practical math of probability seemed like a good choice for making the most of my elective credits at the university, so I also enrolled in Prof. Anandamayee Mujamdar’s “Probability Models” for the Fall 2024 semester. The prerequisites were linear algebra, “Probability and Statistics I”, and “Calculus III”, but the registration webapp hadn’t allowed me to enroll, presumably because it didn’t believe I knew linear algebra. (The linear algebra requirement at SFSU was four units. My 2007 linear algebra class from UC Santa Cruz, which was on a quarter system, got translated to 3.3 semester units.) Prof. Mujamdar hadn’t replied to my July email requesting a permission code, but when I inquired in person at the end of the first class, she told me to send a follow-up email, and then got me the code.

(I had also considered taking the online-only “Introduction to Linear Models”, which had the same prerequisites, but Prof. Mohammad Kafai also hadn’t replied to my July email, and I didn’t bother following up, which was just as well: the semester ended up feeling busy enough with just the real analysis, probability models, my gen-ed puff course, and maintaining my soul in an environment that assumes people need a bureaucratic control structure in order to keep busy.)

Like “Real II”, “Probability Models” was also administratively cross-listed as both a graduate (“MATH 742”, “Advanced Probability Models”) and upper-division undergraduate course (“MATH 442”), despite no difference whatsoever in the work required of graduate and undergraduate students. After some weeks of reviewing the basics of random variables and conditional expectation, the course covered Markov chains and the Poisson process.

The textbook was Introduction to Probability Models (12th edition) by Sheldon M. Ross, which, like Wade, felt in bad taste for reasons that were hard to put my finger on. Lectures were punctuated with recitation days on which we took a brief quiz and then did exercises from a worksheet for the rest of the class period. There was more content to cover than the class meeting schedule could accommodate, so there were also video lectures on Canvas, which I mostly did not watch. (I attended class because it was a social expectation and because attendance was 10% of the grade, but I preferred to learn from the book. As long as I was completing the assignments, that shouldn’t be a problem … right?)

In contrast to what I considered serious math, the course was very much school-math about applying particular techniques to solve particular problem classes, taken to the parodic extent of quizzes and tests re-using worksheet problems verbatim. (You’d expect a statistics professor to know not to test on the training set!)

It was still a lot of work, which I knew needed to be taken seriously in order to do well in the course. The task of quiz #2 was to derive the moment-generating function of the exponential distribution. I had done that successfully on the recitation worksheet earlier, but apparently that and the homework hadn’t been enough practice, because I botched it on quiz day. After the quiz, Prof. Mujamdar wrote the correct derivation on the board. She had also said that we could re-submit a correction to our quiz for half-credit, but I found this policy confusing: it felt morally dubious that it should be possible to just copy down the solution from the board and hand that in, even for partial credit. (I guess the policy made sense from the perspective of school students needing to be nudged and manipulated with credit in order to do even essential things like trying to learn from one’s mistakes.) For my resubmission, I did the correct derivation at home in LyX, got it printed, and brought it to office hours the next class day. I resolved to be better prepared for future quizzes (to at least not botch them, minor errors aside) in order to avoid the indignity of having an incentive to resubmit.
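
For reference, the standard derivation goes as follows, assuming the rate parameterization of the exponential (density λe^{−λx} for x ≥ 0). The integral only converges when t < λ, which is why the result comes with that restriction:

```latex
M_X(t) = \mathbb{E}\left[e^{tX}\right]
       = \int_0^\infty e^{tx}\,\lambda e^{-\lambda x}\,dx
       = \lambda \int_0^\infty e^{-(\lambda - t)x}\,dx
       = \lambda \left[\frac{-e^{-(\lambda - t)x}}{\lambda - t}\right]_0^\infty
       = \frac{\lambda}{\lambda - t}, \qquad t < \lambda
```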

I mostly succeeded at that. I would end up doing a resubmission for quiz #8, which was about how to sample from an exponential distribution (with λ=1) given the ability to sample from the uniform distribution on [0,1], by inverting the exponential’s cumulative distribution function. (It had been covered in class, and I had gotten plenty of practice on that week’s assignments with importance sampling using exponential proposal distributions, but I did it in Rust using the rand_distr library rather than what was apparently the intended method of implementing exponential sampling from a uniform RNG “from scratch”.) I blunted the indignity of a resubmission that merely recapitulated the answer written on the board after the quiz by additionally inverting, on my own, the c.d.f. of a different distribution, the Pareto.
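
A minimal sketch of the inverse-c.d.f. method, written in Python rather than Rust, with hypothetical helper names. It assumes the rate parameterization of the exponential, F(x) = 1 − e^{−λx}, and the Pareto with scale x_m and shape α, F(x) = 1 − (x_m/x)^α for x ≥ x_m. In each case you set u = F(x) and solve for x:

```python
import math
import random


def exponential_from_uniform(u: float, lam: float = 1.0) -> float:
    """Invert the exponential c.d.f. F(x) = 1 - exp(-lam * x).

    Solving u = F(x) for x gives x = -ln(1 - u) / lam.
    """
    if not 0.0 <= u < 1.0:
        raise ValueError("u must lie in [0, 1)")
    return -math.log1p(-u) / lam


def pareto_from_uniform(u: float, alpha: float, x_m: float = 1.0) -> float:
    """Invert the Pareto c.d.f. F(x) = 1 - (x_m / x) ** alpha for x >= x_m.

    Solving u = F(x) for x gives x = x_m * (1 - u) ** (-1 / alpha).
    """
    if not 0.0 <= u < 1.0:
        raise ValueError("u must lie in [0, 1)")
    return x_m * (1.0 - u) ** (-1.0 / alpha)


def sample(inverse_cdf, n: int, rng: random.Random, **params) -> list[float]:
    """Draw n samples by feeding uniform variates on [0, 1) through an inverse c.d.f."""
    return [inverse_cdf(rng.random(), **params) for _ in range(n)]


if __name__ == "__main__":
    rng = random.Random(0)
    xs = sample(exponential_from_uniform, 100_000, rng, lam=1.0)
    print(sum(xs) / len(xs))  # sample mean; should be close to 1 / lam = 1
```

Switching distributions only means swapping the inverse c.d.f. that the uniform stream is fed through. Using `math.log1p(-u)` instead of `math.log(1 - u)` keeps precision when u is tiny.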

I continued my practice of using LLMs for hints when I got stuck on assignments, and citing the help in my writeup; Prof. Mujamdar seemed OK with it when I mentioned it at office hours. (I went to office hours occasionally, when I had a question for Prof. Mujamdar, who was kind and friendly to me, but it wasn’t a social occasion like Prof. Schuster’s conference-room office hours.)

I was apparently more conscientious than most students. Outside of class, the grad student who graded our assignments recommended that I make use of the text’s solutions manual (which was circulating in various places online) to check my work. Apparently, he had reason to suspect that some other students in the class were just copying from the solutions manual, but was not given the authority to prosecute the matter when he raised the issue to the professor. He said that he felt bad marking me down for my mistakes when it was clear that I was trying to do the work.

The student quality seemed noticeably worse than “Real II”, at least along the dimensions that I was sensitive to. There was a memorable moment when Prof. Mujamdar asked which students were in undergrad. I raised my hand. “Really?” she said.

It was only late in the semester that I was alerted by non-course reading (specifically a footnote in the book by Daphne Koller and the other guy) that the stationary distribution of a Markov chain is a (left) eigenvector of the transition matrix with eigenvalue 1. Taking this linear-algebraic view has interesting applications: for example, the mixing time of the chain is governed by the second-largest eigenvalue in absolute value, because any starting distribution can be expressed in terms of an eigenbasis (when the matrix is diagonalizable), and the coefficients of all but the stationary vector decay geometrically as you keep iterating (because, for an irreducible, aperiodic chain, all the other eigenvalues are less than 1 in absolute value).
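
To make the eigenvector claim concrete, here is a small sketch in plain Python with hypothetical helper names. It uses a two-state chain, where everything can be checked by hand. With P = [[1 − a, a], [b, 1 − b]], solving π(P − I) = 0 together with π₀ + π₁ = 1 gives π = (b/(a + b), a/(a + b)). The other eigenvalue of P is 1 − a − b, and the distance to stationarity shrinks by exactly that factor on every step:

```python
def step(dist: list[float], P: list[list[float]]) -> list[float]:
    """One step of the chain: the row vector dist times the transition matrix P."""
    n = len(dist)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]


def two_state_chain(a: float, b: float) -> list[list[float]]:
    """Transition matrix that leaves state 0 w.p. a and leaves state 1 w.p. b."""
    return [[1 - a, a], [b, 1 - b]]


def stationary_two_state(a: float, b: float) -> list[float]:
    """Closed-form solution of pi (P - I) = 0 with pi_0 + pi_1 = 1."""
    return [b / (a + b), a / (a + b)]


def distances_to_stationary(a: float, b: float, start: list[float], steps: int) -> list[float]:
    """|mu_t(0) - pi_0| for t = 1..steps, starting from the distribution `start`."""
    P = two_state_chain(a, b)
    pi = stationary_two_state(a, b)
    dist = list(start)
    out = []
    for _ in range(steps):
        dist = step(dist, P)
        out.append(abs(dist[0] - pi[0]))
    return out


if __name__ == "__main__":
    a, b = 0.3, 0.1
    P = two_state_chain(a, b)
    pi = stationary_two_state(a, b)
    print("pi =", pi, " pi P =", step(pi, P))  # pi is a left eigenvector, eigenvalue 1
    lam2 = 1 - a - b  # the second eigenvalue of P
    ds = distances_to_stationary(a, b, [1.0, 0.0], 5)
    for t in range(1, len(ds)):
        # Each distance is |lam2| times the previous one.
        print(t + 1, ds[t], ds[t] / ds[t - 1], lam2)
```

The closer 1 − a − b is to ±1, the slower the chain mixes. That is the two-state shadow of the general statement about the second-largest eigenvalue.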

The feeling of enlightenment was outweighed by embarrassment that I hadn’t independently noticed that the stationary distribution was an eigenvector (we had been subtracting 1 off the main diagonal and solving the system for weeks; the operation should have felt familiar), and, more than either of those, annoyance that neither the textbook nor the professor had deigned to mention this relevant fact in a course that had linear algebra as a prerequisite. When I tried to point it out during the final review session, it didn’t seem like Prof. Mujamdar had understood what I said—not for lack of linear algebra knowledge, I’m sure—let alone any of the other students.

I can only speculate that the occurrence of a student pointing out something about mathematical reality that wasn’t on the test or syllabus was so unexpected, so beyond what everyone had been conditioned to think school was about, that no one had any context to make sense of it. A graduate statistics class at San Francisco State University just wasn’t that kind of space. I did get an A.

The 85th William Lowell Putnam Mathematical Competition

I also organized a team for the Putnam Competition, SFSU’s first in institutional memory. (I’m really proud of my recruitment advertisements to the math majors’ mailing list.) The story of the Putnam effort has been recounted in a separate post, “The End of the Movie: SF State’s 2024 Putnam Competition Team, A Retrospective”.

As the email headers at the top of the post indicate, the post was originally composed for the department mailing lists, but it never actually got published there: department chair Eric Hsu wrote to me that it was “much too long to send directly to the whole department” but asked for my “permission to eventually share it with the department, either as a link or possibly as a department web page.” (He cc’d a department office admin whom I had spoken to about posting the Putnam training session announcements on the mailing list; reading between the lines, I’m imagining that she was discomfited by the tone of the post and had appealed to Chair Hsu’s authority about whether to let it through.)

I assumed that the ask to share with the department “eventually” was polite bullshit on Hsu’s part to let me down gently. (Probably no one gets to be department chair without being molded into a master of polite bullshit.) Privately, I didn’t think the rationale made sense—it’s just as easy to delete a long unwanted mailing list message as a short one; the email server wasn’t going to run out of paper—but it seemed petty to argue. I replied that I hadn’t known the rules for the mailing list and that he should feel free to share or not as he saw fit.

“Measure and Integration” (Spring 2025)

I had a busy semester planned for Spring 2025, with two graduate-level (true graduate-level, not cross-listed) analysis courses plus three gen-ed courses that I needed to graduate. (Following Prof. Schuster, I’m humorously counting “Modern Algebra I” as a gen-ed course.) I only needed one upper-division undergrad math course other than “Modern Algebra I” to graduate, but while I was at the University for one more semester, I was intent on getting my money’s worth. I aspired to get a head start (ideally on all three math courses) over winter break and checked out a complex analysis book with exercise solutions from the library, but only ended up getting any traction on measure theory, doing some exercises from chapter 14 of Schröder, “Integration on Measure Spaces”.

Prof. Schuster was teaching “Measure and Integration” (“MATH 710”). It was less intimate than “Real II” the previous semester, with a number of students in the teens. The class met at 9:30 a.m. on Tuesdays and Thursdays, which I found inconveniently early in the morning given my hour-and-twenty-minute BART-and-bus commute. I was late the first day. After running into the room, I put the printout of my exercises from Schröder on the instructor’s desk and said, “Homework.” Prof. Schuster looked surprised for a moment, then accepted it without a word.

The previous semester, Prof. Schuster said he was undecided between using Real Analysis by Royden and Measure, Integration, and Real Analysis by Sheldon Axler (of Linear Algebra Done Right fame, and also our former department chair at SFSU) as the textbook. He ended up going with Axler, which for once was in good taste. (Axler would guest-lecture one day when Prof. Schuster was absent. I got him to sign my copy of Linear Algebra Done Right.) We covered Lebesgue measure and the Lebesgue integral, then skipped over the chapter on product measures (which Prof. Schuster said was technical and not that interesting) in favor of starting on Banach spaces. (As with “Several Variables” the previous semester, Prof. Schuster did not feel beholden to making the Bulletin course titles not be lies; he admitted late in the semester that it might as well have been called “Real Analysis III”.)

I would frequently be a few minutes late throughout the semester. One day, the BART had trouble while my train was in downtown San Francisco, and it wasn’t clear when it would move again. I got off and summoned a Waymo driverless taxi to take me the rest of the way to the University. We were covering the Cantor set that day, and I rushed in with more than half the class period over. “Sorry, someone deleted the middle third of the train,” I said.

Measure theory was a test of faith which I’m not sure I passed. Everyone who reads Wikipedia knows about the notorious axiom of choice. This was the part of the school curriculum in which the axiom of choice becomes relevant. It impressed upon me that as much as I like analysis as an intellectual activity, I … don’t necessarily believe in this stuff? We go to all this work to define sigma-algebras in order to rule out pathological sets whose elements cannot be written down because they’re defined using the axiom of choice. You could argue that it’s not worse than uncountable sets, and that alternatives to classical mathematics just end up needing to bite different bullets. (In computable analysis, equality turns out to be uncomputable, because there’s no limit on how many decimal places you would need to check for a tiny difference between two almost-equal numbers. For related reasons, all computable functions are continuous.) But I’m not necessarily happy about the situation.
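
Here is a toy illustration of why equality is the problem child. It is a sketch under stated assumptions, not a standard library. A computable real is modeled as a function that, given n, returns a rational within 2⁻ⁿ of the true value. This is one common convention, and all names below are hypothetical. Under this model, inequality is semi-decidable: if two reals differ, some finite precision will reveal it. But no finite amount of checking can confirm that two reals are equal:

```python
from fractions import Fraction
from typing import Callable, Optional

# A computable real in this toy model: n -> a rational within 2**-n of the true value.
Real = Callable[[int], Fraction]


def const(q: Fraction) -> Real:
    """A rational number, which is trivially approximable to any precision."""
    return lambda n: q


def sqrt2() -> Real:
    """sqrt(2) to within 2**-n, by bisection on rationals (invariant: lo < sqrt 2 <= hi)."""
    def approx(n: int) -> Fraction:
        lo, hi = Fraction(1), Fraction(2)
        while hi - lo > Fraction(1, 2 ** n):
            mid = (lo + hi) / 2
            if mid * mid < 2:
                lo = mid
            else:
                hi = mid
        return lo
    return approx


def find_apartness(x: Real, y: Real, max_n: int) -> Optional[int]:
    """Search for a precision n at which x and y are provably different.

    If |x(n) - y(n)| > 2 * 2**-n, the true values must differ, because each
    approximation is off by at most 2**-n. When x != y, such an n always exists,
    so an unbounded search would halt. When x == y, it would run forever, so
    this sketch needs an artificial budget max_n and returns None when it runs out.
    """
    for n in range(max_n + 1):
        if abs(x(n) - y(n)) > Fraction(2, 2 ** n):
            return n
    return None


if __name__ == "__main__":
    print(find_apartness(sqrt2(), const(Fraction(141421, 100000)), 64))
    print(find_apartness(sqrt2(), sqrt2(), 25))  # None: "no difference found yet"
```

The `max_n` budget is the crux. Without it, `find_apartness` on two equal reals would never return, which is the computational face of equality being uncomputable.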

I did okay. I was late on some of the assignments (and didn’t entirely finish assignments #9 and #10), but the TA was late in grading them, too. I posted a 31/40 (77.5%) on the midterm. I was expecting to get around 80% on the final based on my previous performance on Prof. Schuster’s examinations, but I ended up posting a 48/50 (96%), locking in an A for the course.

“Theory of Functions of a Complex Variable” (Spring 2025)

My other graduate course was “Theory of Functions of a Complex Variable” (“MATH 730”), taught by Prof. Chun-Kit Lai. I loved the pretentious title and pronounced all seven words at every opportunity. (Everyone else, including Prof. Lai’s syllabus, said “complex analysis” when they didn’t say “730”.)

The content lived up to the pretension of the title. This was unambiguously the hardest school class I had ever taken. Not in the sense that Prof. Lai was particularly strict about grades or anything; on the contrary, he seemed charmingly easygoing about the institutional structure of school, while of course taking it for granted as an unquestioned background feature of existence. But he was pitching the material to a higher level than Prof. Schuster or Axler.

The textbook was Complex Analysis by Elias M. Stein and Rami Shakarchi, volume II in their “Princeton Lectures in Analysis” series. Stein and Shakarchi leave a lot to the reader (prototypically a Princeton student). It wasn’t to my taste—but this time, I knew the problem was on my end. My distaste for Wade and Ross had been a reflection of the ways in which I was spiritually superior to the generic SFSU student; my distaste for Stein and Shakarchi reflected the grim reality that I was right where I belonged.

I don’t think I was alone in finding the work difficult. Prof. Lai gave the entire class an extension to resubmit assignment #2 because the average performance had been so poor.

Prof. Lai didn’t object to my LLM hint usage policy when I inquired about it at office hours. I still felt bad about how much external help I needed just to get through the assignments. The fact that I footnoted everything meant that I wasn’t being dishonest. (In his feedback on my assignment #7, Prof. Lai wrote to me, “I like your footnote. Very genuine and is a modern way of learning math.”) It still felt humiliating to turn in work with so many footnotes: “Thanks to OpenAI o3-mini-high for hints”, “Thanks to Claude Sonnet 3.7 for guidance”, “Thanks to [classmate’s name] for this insight”, “Thanks to the ‘Harmonic Conjugate’ Wikipedia article”, “This is pointed out in Tristan Needham’s Visual Complex Analysis, p. […]”, &c.

It’s been said that the real-world usefulness of LLM agents has been limited by low reliability impeding the horizon length of tasks: if the agent can only successfully complete a single step with probability 0.9, then its probability of succeeding on a task that requires ten correct steps in sequence is only 0.9¹⁰ ≈ 0.35.
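
The arithmetic can be sketched as follows, assuming the steps succeed independently with a fixed per-step probability. Real tasks are messier than that. The helper names are hypothetical:

```python
def chain_success_probability(p_step: float, steps: int) -> float:
    """Chance that all `steps` steps succeed, assuming each one independently
    succeeds with probability p_step."""
    return p_step ** steps


def horizon_at_threshold(p_step: float, threshold: float) -> int:
    """Longest task length whose end-to-end success probability is still at
    least `threshold`, under the same independence assumption."""
    if not (0.0 < p_step < 1.0 and 0.0 < threshold <= 1.0):
        raise ValueError("need 0 < p_step < 1 and 0 < threshold <= 1")
    n = 0
    while chain_success_probability(p_step, n + 1) >= threshold:
        n += 1
    return n


if __name__ == "__main__":
    print(chain_success_probability(0.9, 10))  # about 0.35
    print(horizon_at_threshold(0.9, 0.5))      # 6 steps before dropping below a coin flip
```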

That was about how I felt with math. Prof. Schuster was assigning short horizon-length problems from Axler, which I could mostly do independently; Prof. Lai was assigning longer horizon-length problems from Stein and Shakarchi, which I mostly couldn’t. All the individual steps made sense once explained, but I could only generate so many steps before getting stuck.

If I were just trying to learn, the external help wouldn’t have seemed like a moral issue. I look things up all the time when I’m working on something I care about, but the institutional context of submitting an assignment for a grade seemed to introduce the kind of moral ambiguity that had made school so unbearable to me, in a way that didn’t feel fully mitigated by the transparent footnotes.

I told myself not to worry about it. The purpose of the “assignment” was to help us to learn about the theory of functions of a complex variable, and I was doing that. Prof. Lai had said in class and in office hours that he trusted us, that he trusted me. If I had wanted to avoid this particular source of moral ambiguity at all costs, but still wanted a Bachelor’s degree, I could have taken easier classes for which I wouldn’t need so much external assistance. (I didn’t even need the credits from this class to graduate.)

But that would be insane. The thing I was doing now, of jointly trying to maximize math knowledge while also participating in the standard system to help with that, made sense. Minimizing perceived moral ambiguity (which was all in my head) would have been a really stupid goal. Now, so late in life at age 37, I wanted to give myself fully over to not being stupid, even unto the cost of self-perceived moral ambiguity.

Prof. Lai eschewed in-person exams in favor of take-homes for both the midterm and the final. He said reasonable internet reference usage was allowed, as with the assignments. I didn’t ask for further clarification because I had already neurotically asked for clarification about the policy for the assignments once more than was necessary, but resolved to myself that for the take-homes, I would allow myself static websites but obviously no LLMs. I wasn’t a grade-grubber; I would give myself the authentic 2010s take-home exam experience and accept the outcome.

(I suspect Prof. Lai would have allowed LLMs on the midterm if I had asked—I didn’t get the sense that he yet understood the edge that the latest models offered over mere books and websites. On 29 April, a friend told me that instructors will increasingly just assume students are cheating with LLMs anyway; anything that showed I put thought in would be refreshing. I said that for this particular class and professor, I thought I was a semester or two early for that. In fact, I was two weeks early: on 13 May, Prof. Lai remarked before class and in the conference room during Prof. Schuster’s office hours that he had given a bunch of analysis problems to Gemini the previous night, and it got them all right.)

I got a 73/100 on my midterm. Even with the (static) internet, sometimes I would hit a spot where I got stuck and couldn’t get unstuck in a reasonable amount of time.

There were only 9 homework assignments during the semester (compared to 12 in “Measure and Integration”) to give us time to work on an expository paper and presentation on one of the Gamma function, the Riemann zeta function, the prime number theorem, or elliptic functions. I wrote four pages on “Pinpointing the Generalized Factorial”, explaining the motivation of the Gamma function, except that I’m not fond of how the definition is shifted by one from what you’d expect, so I wrote about the unshifted Pi function instead.
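
For concreteness, here is how the two conventions relate, using the standard definitions. The integration-by-parts step is what makes Π interpolate the factorial without the shift:

```latex
\Pi(z) = \int_0^\infty t^{z} e^{-t}\,dt \quad (\operatorname{Re} z > -1),
\qquad
\Gamma(z) = \Pi(z - 1) = \int_0^\infty t^{z-1} e^{-t}\,dt \quad (\operatorname{Re} z > 0)

\Pi(z + 1) = \Big[-t^{z+1} e^{-t}\Big]_0^\infty + (z + 1)\int_0^\infty t^{z} e^{-t}\,dt = (z + 1)\,\Pi(z),
\qquad
\Pi(0) = \int_0^\infty e^{-t}\,dt = 1

\Pi(n) = n!, \qquad \Gamma(n) = (n - 1)! \qquad (n = 1, 2, 3, \dots)
```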

I wish I had allocated more time to it. This was my one opportunity in my institutionalized math career to “write a paper” and not merely “complete an assignment”; it would have been vindicating to go above and beyond and knock this one out of the park. (Expository work had been the lifeblood of my non-institutionalized math life.) There was so much more I could have said about the generalized factorial, and applications (like the fractional calculus), but it was a busy semester and I didn’t get to it. It’s hardly an excuse that Prof. Lai wrote an approving comment and gave me full credit for those four pages.

I was resolved to do better on the take-home final than the take-home midterm, but it was a struggle. I eventually got everything, but what I submitted ended up having five footnotes to various math.stackexchange.com answers. (I was very transparent about my reasoning process; no one could accuse me of dishonesty.) For one problem, I ended up using formulas for the modulus of the derivative of a Blaschke factor at 0 and the preimage of zero which I found in David C. Ullrich’s Complex Made Simple from the University library. It wasn’t until after I submitted my work that I realized that the explicit formulas had been unnecessary; the fact that they were inverses followed from the inverse function theorem.
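
For context, here are the relevant identities, assuming the convention Stein and Shakarchi use for the Blaschke factor. The exam problem itself isn't reproduced here, so this is only a sketch. The factor swaps 0 and α and is its own inverse. The chain rule therefore forces its derivatives at those two points to be reciprocals, and the explicit moduli in the second line can be checked against the identity in the third:

```latex
\psi_\alpha(z) = \frac{\alpha - z}{1 - \bar{\alpha} z}, \quad |\alpha| < 1,
\qquad \psi_\alpha(0) = \alpha, \quad \psi_\alpha(\alpha) = 0, \quad \psi_\alpha \circ \psi_\alpha = \mathrm{id}

\psi_\alpha'(z) = \frac{|\alpha|^2 - 1}{(1 - \bar{\alpha} z)^2}
\quad\Longrightarrow\quad
|\psi_\alpha'(0)| = 1 - |\alpha|^2, \qquad |\psi_\alpha'(\alpha)| = \frac{1}{1 - |\alpha|^2}

\psi_\alpha'(\psi_\alpha(0))\,\psi_\alpha'(0) = (\psi_\alpha \circ \psi_\alpha)'(0) = 1
```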

Prof. Lai gave me 95/100 on my final, and an A in the course. I think he was being lenient with the points. Looking over the work I had submitted throughout the semester, I don’t think it would have been an A at Berkeley (or Princeton).

I guess that’s okay because grades aren’t real, but the work was real. If Prof. Lai had faced a dilemma between watering down either the grading scale or the course content in order to accommodate SFSU students being retarded, I’m glad he chose to preserve the integrity of the content.

“Modern Algebra I” (Spring 2025)

One of the quirks of being an autodidact is that it’s easy to end up with an “unbalanced” skill profile relative to what school authorities expect. As a student of mathematics, I consider myself more of an analyst than an algebraist and had not previously prioritized learning abstract algebra nor (what the school authorities cared about) “taking” an algebra “class”, neither the previous semester nor in Fall 2012/Spring 2013. (Over the years, I had taken a few desultory swings at Dummit & Foote, but had never gotten very far.) I thus found myself in Prof. Dusty Ross’s “Modern Algebra I” (“MATH 335”), the last “core” course I needed to graduate.

“Modern Algebra I” met on Monday, Wednesday, and Friday. All of my other classes met Tuesdays and Thursdays. I had wondered whether I could save myself a lot of commuting by ditching algebra most of the time, but started off the semester dutifully attending—and, as long as I was on campus that day anyway, also sitting in on Prof. Ross’s “Topology” (“MATH 450”) even though I couldn’t commit to a fourth math course for credit.

Prof. Ross is an outstanding schoolteacher, the best I encountered at SFSU. I choose my words here very carefully. I don’t mean he was my favorite professor. I mean that he was good at his job. His lectures were clear and well-prepared, and punctuated with group work on well-designed worksheets (pedagogically superior to the whole class just being lecture). The assignments and tests were fair, and so on.

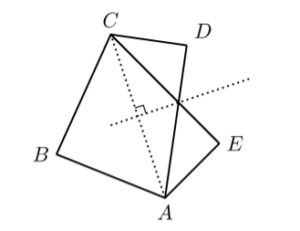

On the first day, he brought a cardboard square with color-labeled corners to illustrate the dihedral group. When he asked us how many ways there were to position the square, I said: eight, because the dihedral group for the n-gon has 2n elements. On Monday of the second week, Prof. Ross stopped me after class to express disapproval of how I had brought out my copy of Dummit & Foote and referred to Lagrange’s theorem during the group worksheet discussion about subgroups of cyclic groups; we hadn’t covered that yet. He also criticized my response about the dihedral group from the previous week; those were just words, he said. I understood the criticism that there’s a danger in citing results you or your audience might not understand, but resented the implication that knowledge that hadn’t been covered in class was therefore inadmissible.
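The "eight" answer is easy to confirm by brute force. Label the corners 0 through n − 1 around the polygon, take a rotation by one corner and a reflection across the axis through corner 0, and close that pair under composition. A minimal Python sketch, with symmetries represented as tuples of corner images (a representation chosen for illustration; the helper names are hypothetical):

```python
from itertools import product


def compose(p, q):
    """Return the permutation "apply q first, then p" as a tuple of images."""
    return tuple(p[i] for i in q)


def generate(generators):
    """Close a list of permutations under composition.

    Because the group is finite, every element is a word in the generators,
    so a breadth-first search over left multiplication by generators finds
    all of them.
    """
    n = len(generators[0])
    group = {tuple(range(n))}  # start from the identity permutation
    frontier = set(group)
    while frontier:
        new = {compose(g, h) for g, h in product(generators, frontier)} - group
        group |= new
        frontier = new
    return group


def dihedral(n):
    """Symmetries of a regular n-gon as permutations of corners 0..n-1."""
    rotation = tuple((i + 1) % n for i in range(n))  # one step around the polygon
    reflection = tuple((-i) % n for i in range(n))   # flip that fixes corner 0
    return generate([rotation, reflection])


if __name__ == "__main__":
    print(len(dihedral(4)))  # 8, i.e. 2n for n = 4
```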

I asked whether he cared whether I attended class, and he said that the answer was already in the syllabus. (Attendance was worth 5% of the grade.) After that, I mostly stayed home on Mondays, Wednesdays, and Fridays unless there was a quiz (and didn’t show up to topology again), which seemed like a mutually agreeable outcome to all parties.

Dusty Ross is a better schoolteacher than Alex Schuster, but in my book, Schuster is a better person. Ross believes in San Francisco State University; Schuster just works there.

The course covered the basics of group theory, with a little bit about rings at the end of the semester. The textbook was Joseph A. Gallian’s Contemporary Abstract Algebra, which I found to be in insultingly poor taste. The contrast between “Modern Algebra I” (“MATH 335”) and “Theory of Functions of a Complex Variable” (“MATH 730”) that semester did persuade me that the course numbers did have semantic content in their first digit (3xx = insulting, 4xx or cross-listed 4xx/7xx = requires effort, 7xx = potentially punishing).

I mostly treated the algebra coursework as an afterthought to the analysis courses I was devoting most of my focus to. I tried to maintain a lead on the weekly algebra assignments (five problems hand-picked by Prof. Ross, not from Gallian), submitting them an average of 5.9 days early, in the spirit of getting it out of the way. On a few assignments, I wrote some Python to compute orders of elements or cosets of permutation groups rather than doing it by hand. One week I started working on the prerequisite chapter on polynomial rings from the algebraic geometry book Prof. Ross had just written with his partner Prof. Emily Clader, but that was just to show off to Prof. Ross at office hours that I had at least looked at his book; I didn’t stick with it.
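The original scripts aren't reproduced here; as a hypothetical reconstruction of that kind of computation, the sketch below uses two standard facts: the order of a permutation is the least common multiple of its disjoint cycle lengths, and the left cosets gH of a subgroup H partition the group. Permutations are tuples of images of 0..n−1 (an assumed representation), all function names are illustrative, and `math.lcm` needs Python 3.9 or later.

```python
from itertools import permutations
from math import lcm


def compose(p, q):
    """Apply q first, then p; permutations are tuples of images of 0..n-1."""
    return tuple(p[i] for i in q)


def cycle_lengths(p):
    """Lengths of the disjoint cycles of permutation p (fixed points count as 1)."""
    seen, lengths = set(), []
    for start in range(len(p)):
        if start in seen:
            continue
        length, i = 0, start
        while i not in seen:  # walk the cycle containing `start`
            seen.add(i)
            i = p[i]
            length += 1
        lengths.append(length)
    return lengths


def order(p):
    """Order of p: the lcm of its cycle lengths."""
    return lcm(*cycle_lengths(p))


def cyclic_subgroup(g):
    """The subgroup generated by g: e, g, g^2, ... until it cycles back to e."""
    identity = tuple(range(len(g)))
    elements, current = [identity], g
    while current != identity:
        elements.append(current)
        current = compose(g, current)
    return elements


def left_cosets(group, subgroup):
    """Partition `group` into left cosets gH of `subgroup`."""
    remaining = set(group)
    cosets = []
    while remaining:
        g = min(remaining)  # any representative works; min() keeps output deterministic
        coset = frozenset(compose(g, h) for h in subgroup)
        cosets.append(coset)
        remaining -= coset
    return cosets


if __name__ == "__main__":
    s4 = list(permutations(range(4)))
    four_cycle = (1, 2, 3, 0)
    print(order(four_cycle), order((1, 0, 3, 2)))  # 4 2
    cosets = left_cosets(s4, cyclic_subgroup(four_cycle))
    print(len(cosets))  # 6 = 24 / 4, as Lagrange's theorem predicts
```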

The Tutoring and Academic Support Center (TASC) offered tutoring for “Modern Algebra I”, so I signed up for weekly tutoring sessions with the TA for the class, not because I needed help to do well in the class, but because it was nice to work with someone. Sometimes I did the homework, sometimes we talked about some other algebra topic (from Dummit & Foote, or Ross & Clader that one week), and one week I tried to explain my struggles with measure theory. TASC gave out loyalty program–style punch cards that bribed students with a choice between two prizes every three tutoring sessions, which is as patronizing as it sounds, but wondering what the next prize options would be was a source of anticipation and mystery; I got a pen and a button and a tote bag over the course of the semester.

I posted a somewhat disappointing 79/90 (87.8%) on the final, mostly due to stupid mistakes or laziness on my part; I hadn’t prepped that much. Wracking my brain during a “Give an example of each the [sic] following” question on the exam, I was proud to have come up with the quaternions and “even-integer quaternions” as examples of noncommutative rings with and without unity, respectively.

He didn’t give me credit for those. We hadn’t covered the quaternions in class.
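For the record, both examples do what the question asked. The quaternions a + bi + cj + dk obey i² = j² = k² = ijk = −1, so ij = k while ji = −k. Quaternions whose four coordinates are all even integers are closed under addition and multiplication but do not contain 1, and no other element can stand in for it, since e·2 = 2 forces e = 1 in a ring without zero divisors. A small Python check, representing a quaternion as a 4-tuple of integers (a representation chosen for illustration; the closure test only spot-checks small coordinates):

```python
from itertools import product


def qmul(p, q):
    """Hamilton product of quaternions written as (a, b, c, d) = a + bi + cj + dk."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


def is_even(q):
    """True if every coordinate is an even integer."""
    return all(x % 2 == 0 for x in q)


ONE, I, J, K = (1, 0, 0, 0), (0, 1, 0, 0), (0, 0, 1, 0), (0, 0, 0, 1)

if __name__ == "__main__":
    print(qmul(I, J), qmul(J, I))  # (0, 0, 0, 1) (0, 0, 0, -1): ij = k, ji = -k
    # Spot-check closure on small even-integer quaternions (coordinates in {-2, 0, 2})
    evens = list(product((-2, 0, 2), repeat=4))
    print(all(is_even(qmul(p, q)) for p in evens for q in evens))  # True
    print(is_even(ONE))  # False: 1 is not an even-integer quaternion
```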

Not Sweating the Fake Stuff (Non-Math)

In addition to the gen-ed requirements that could be satisfied with transfer credits, there were also upper-division gen-ed requirements that had to be taken at SFSU: one class each from “UD-B: Physical and/or Life Sciences” (which I had satisfied with a ridiculous “Contemporary Sexuality” class in Summer 2012), “UD-C: Arts and/or Humanities”, and “UD-D: Social Sciences”. There was also an “Area E: Lifelong Learning and Self-Development” requirement, and four “SF State Studies” requirements (which overlapped with the UD- classes).

I try to keep it separate from my wholesome math and philosophy blogging, but at this point it’s not a secret that I have a sideline in gender-politics blogging. As soon as I saw the title in the schedule of classes, it was clear that if I had to sit through another gen-ed class, “Queer Literatures and Media” was the obvious choice. I thought I might be able to reuse some of my coursework for the blog, or if nothing else, get an opportunity to troll the professor.

The schedule of classes had said the course was to be taught by Prof. Deborah Cohler, so in addition to the listed required texts, I bought the Kindle version of her Citizen, Invert, Queer: Lesbianism and War in Early Twentieth-Century Britain, thinking that “I read your book, and …” would make an ideal office-hours icebreaker. There was a last-minute change: the course would actually be taught by Prof. Sasha Goldberg (who would not be using Prof. Cohler’s book list; I requested Kindle Store refunds on most of them).

I didn’t take the class very seriously. I was taking “Real Analysis II” and “Probability Models” seriously that semester, because for those classes, I had something to prove—that I could do well in upper-division math classes if I wanted to. For this class, the claim that “I could if I wanted to” didn’t really seem in doubt.

I didn’t not want to. But even easy tasks take time that could be spent doing other things. I didn’t always get around to doing all of the assigned reading or video-watching. I didn’t read the assigned segment of Giovanni’s Room. (And honestly disclosed that fact during class discussion.) I skimmed a lot of the narratives in The Stonewall Reader. My analysis of Carol (assigned as 250 words, but I wrote 350) used evidence from a scene in the first quarter of the film, because that was all I watched. I read the Wikipedia synopsis of They/Them instead of watching it. I skimmed part of Fun Home, which was literally a comic book that you’d expect me to enjoy. When Prof. Goldberg assigned an out-of-print novel (and before it was straightened out how to get it free online), I bought the last copy from AbeBooks with expedited shipping … and then didn’t read most of it. (I gave the copy to Prof. Goldberg at the end of the semester.)

My negligence was the source of some angst. If I was going back to school to “do it right this time”, why couldn’t I even be bothered to watch a movie as commanded? It’s not like it’s difficult!

But the reason I had come back was that I could recognize the moral legitimacy of a command to prove a theorem about uniform convergence. For this class, while I could have worked harder if I had wanted to, it was hard to want to when much of the content was so impossible to take seriously.

Asked to explain why the author of an article said that Halloween was “one of the High Holy Days for the gay community”, I objected to the characterization as implicitly anti-Semitic and homophobic. The High Holy Days are not a “fun” masquerade holiday the way modern Halloween is. The יָמִים נוֹרָאִים—yamim noraim, “days of awe”—are a time of repentance and seeking closeness to God, in which it is said that הַשֵּׁם—ha’Shem, literally “the name”, an epithet for God—will inscribe the names of the righteous in the Book of Life. Calling Halloween a gay High Holy Day implicitly disrespects either the Jews (by denying the seriousness of the Days of Awe), or the gays (by suggesting that their people are incapable of seriousness), or the reader (by assuming that they’re incapable of any less superficial connection between holidays than “they both happen around October”). In contrast, describing Halloween as a gay Purim would have been entirely appropriate. “They tried to genocide us; we’re still here; let’s have a masquerade party with alcohol” is entirely in the spirit of both Purim and Halloween.

I was proud of that answer (and Prof. Goldberg bought it), but it was the pride of coming up with something witty in response to a garbage prompt that had no other function than to prove that the student can read and write. I didn’t really think the question was anti-Semitic and homophobic; I was doing a bit.

Another assignment asked us to write paragraphs connecting each of our more theoretical course readings (such as Susan Sontag’s “Notes on Camp”, or an excerpt from José Esteban Muñoz’s Disidentifications: Queers of Color and the Performance of Politics) to Gordo, a collection of short stories about a gay Latino boy growing up in 1970s California. (I think Prof. Goldberg was concerned that students hadn’t gotten the “big ideas” of the course, such as they were, and wanted to give an assignment that would force us to re-read them.)

I did it, and did it well. (“[F]or example, Muñoz discusses the possibility of a queer female revolutionary who disidentifies with Frantz Fanon’s homophobia while making use of his work. When Nelson Pardo [a character in Gordo] finds some pleasure in American daytime television despite limited English fluency (‘not enough to understand everything he is seeing’, p. 175), he might be practicing his own form of disidentification.”) But it took time out of my day, and it didn’t feel like time well spent.

There was a discussion forum on Canvas. School class forums are always depressing. No one ever posts in them unless the teacher makes an assignment of it—except me. I threw together a quick 1800-word post, “in search of gender studies (as contrasted to gender activism)”. It was clever, I thought, albeit rambling and self-indulgent, as one does when writing in haste. It felt like an obligation, to show the other schoolstudents what a forum could be and should be. No one replied.

I inquired about Prof. Goldberg’s office hours, which turned out to be directly before and after class, which conflicted with my other classes. (I gathered that Prof. Goldberg was commuting to SF State specifically to teach this class in an adjunct capacity; she more commonly taught at City College of San Francisco.) I ditched “Probability Models” lecture one day, just to talk with her about my whole deal. (She didn’t seem to approve of me ditching another class when I mentioned that detail.)

It went surprisingly well. Prof. Goldberg is a butch lesbian who, crucially, was old enough to remember the before-time prior to the hegemony of gender identity ideology, and seemed sympathetic to gentle skepticism of some of the newer ideas. She could grant that trans women’s womanhood was different from that of cis women, and criticized the way activists tend to glamorize suicide, in contrast to promoting narratives of queer resilience.

When I mentioned my specialization, she remarked that she had never had a math major among her students. Privately, I doubted whether that was really true. (I couldn’t have been the only one who needed the gen-ed credits.) But I found it striking for the lack of intellectual ambition it implied within the discipline. I unironically think you do need some math in order to do gender studies correctly—not a lot, just enough linear-algebraic and statistical intuition to ground the idea of categories as clusters in high-dimensional space. I can’t imagine resigning myself to such smallness, consigning such a vast and foundational area of knowledge to be someone else’s problem—or when I do (e.g., I can’t say I know any chemistry), I feel sad about it.

I was somewhat surprised to see Virginia Prince featured in The Stonewall Reader, which I thought was anachronistic: Prince is famous as the founder of Tri-Ess, the Society for the Second Self, an organization for heterosexual male crossdressers which specifically excluded homosexuals. I chose Prince as the subject for my final project/presentation.

Giving feedback on my project proposal, Prof. Goldberg wrote that I “likely got a master’s thesis in here” (or, one might think, a blog?), and that “because autogynephilia wasn’t coined until 1989, retroactively applying it to a subject who literally could not have identified in that way is inaccurate.” (I wasn’t writing about how Prince identified.)

During the final presentations, I noticed that a lot of students were slavishly mentioning the assignment requirements in the presentation itself: the rubric had said to cite two readings, two media selections, &c. from the course, and people were explicitly saying, “For my two course readings, I choose …” When I pointed out to Prof. Goldberg that this isn’t how anyone does scholarship when they have something to say (you cite sources in order to support your thesis; you don’t say “the two works I’m citing are …”), she said that we could talk about methodology later, but that the assignment was what it was.

For my project, I ignored the presentation instructions entirely and just spent the two days after the Putnam exam banging out a paper titled “Virginia Prince and the Hazards of Noticing” (four pages with copious footnotes, mostly self-citing my gender-politics blog, in LyX with a couple of mathematical expressions in the appendix—a tradition from my community college days). For my presentation, I just had my paper on the screen in lieu of slides and talked until Prof. Goldberg said I was out of time (halfway through the second page).

I didn’t think it was high-quality enough to republish on the blog.

There was one day near the end of the semester when I remember being overcome with an intense feeling of sadness and shame and anger at the whole situation—at the contradiction between what I “should” have done to do well in the class, and what I did do. I felt both as if the contradiction was a moral indictment of me, and that the feeling that it was a moral indictment was a meta-moral indictment of moral indictment.

The feeling passed.

Between the assignments I had skipped and my blatant disregard of the final presentation instructions, I ended up getting a C− in the class, which is perhaps the funniest possible outcome.

“Philosophy of Animals” (Spring 2025)

I was pleased that the charmingly-titled “Philosophy of Animals” fit right into my Tuesday–Thursday schedule after measure theory and the theory of functions of a complex variable. It would satisfy the “UD-B: Physical/Life Science” and “SF State Studies: Environmental Sustainability” gen-ed requirements.

Before the semester, Prof. Kimbrough Moore sent out an introductory email asking us to consider, as a discussion question for our first session, whether it is in some sense contradictory for a vegetarian to eat oysters. I wrote a 630-word email in response (Subject: “ostroveganism vs. Schelling points (was: ‘Phil 392 - Welcome’)”) arguing that there are game-theoretic reasons for animal welfare advocates to commit to vegetarianism or veganism despite a prima facie case that oysters don’t suffer, with a postscript asking if referring to courses by number was common in the philosophy department.

The course, and Prof. Moore himself, were pretty relaxed. There were readings on animal consciousness and rights from the big names (Singer on “All Animals are Equal”, Nagel on “What Is It Like to Be a Bat?”) and small ones, and then some readings about AI at the end of the course.

Homework was to post two questions about the readings on Canvas. There were three written exams, which Prof. Moore indicated was a new anti-ChatGPT measure this semester; he used to assign term papers.

Prof. Moore’s office hours were on Zoom. I would often phone in to chat with him about philosophy, or to complain about school. I found this much more stimulating than the lecture/discussion periods, which I started to ditch more often than not on Tuesdays in favor of Prof. Schuster’s office hours.

Prof. Moore was reasonably competent at his job; I just had trouble seeing why his job, or for that matter, the SFSU philosophy department, should exist.

In one class session, he mentioned offhand (in a slight digression from the philosophy of animals) that there are different types of infinity. By way of explaining, he pointed out that there’s no “next” decimal after 0.2 the way that there’s a next integer after 2. I called out that that wasn’t the argument. (The rationals are countable.) In the same lecture, he explained Occam’s razor in a way that I found rather superficial. (I think you need Kolmogorov complexity or the minimum description length principle to do the topic justice.) That night, I sent him an email explaining the countability of the rationals and recommending a pictorial intuition pump for Occam’s razor due to David MacKay (Subject: “countability; and, a box behind a tree”).
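The point of the correction: density (there is no "next" rational after 0.2) does not stop the rationals from being listed one after another with nothing skipped. One concrete listing of the positive rationals, not necessarily the argument the email used, is the Calkin–Wilf sequence, which Newman's recurrence x ↦ 1/(2⌊x⌋ − x + 1) generates and which contains each positive rational exactly once. Interleaving 0 and the negatives afterward is routine. A minimal Python sketch with hypothetical helper names:

```python
import math
from fractions import Fraction
from itertools import islice


def calkin_wilf():
    """Yield every positive rational exactly once, in Calkin-Wilf order.

    Uses Newman's recurrence x -> 1 / (2*floor(x) - x + 1), starting from 1.
    """
    x = Fraction(1)
    while True:
        yield x
        x = 1 / (2 * math.floor(x) - x + 1)


def position(target):
    """0-based index of a positive rational in the sequence (loops forever otherwise)."""
    for index, q in enumerate(calkin_wilf()):
        if q == target:
            return index


if __name__ == "__main__":
    print([str(q) for q in islice(calkin_wilf(), 10)])
    # ['1', '1/2', '2', '1/3', '3/2', '2/3', '3', '1/4', '4/3', '3/5']
    print(position(Fraction(1, 5)))  # 15
```

So 1/5 (that is, 0.2) sits at a definite finite position in the list even though no rational is "next" after it in size.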

In April, the usual leftist blob on campus had scheduled a “Defend Higher Education” demonstration to protest proposed budget cuts to the California State University system; Prof. Moore offered one point of extra credit in “Philosophy of Animals” for participating.

I was livid. Surely it would be a breach of professional conduct to offer students course credit for attending an anti-abortion or pro-Israel rally. Why should the school presume it had the authority to tell students to speak out in favor of more school? I quickly wrote Prof. Moore an email of complaint, suggesting that the extra credit opportunity be viewpoint-neutral: available to budget cut proponents (or those with more nuanced views) as well as opponents.

I added:

If I don’t receive a satisfactory response addressing the inappropriate use of academic credit to incentivize political activities outside the classroom by Thursday 17 April (the day of the protest), I will elevate this concern to Department Chair Landy. This timeline is necessary to prevent the ethical breach of students being bribed into bad faith political advocacy with University course credit.

I can imagine some readers finding this level of aggression completely inappropriate and morally wrong. Obviously, my outrage was performative in some sense, but it was also deeply felt—as if putting on a performance was the most sincere thing I could do under the circumstances.

It’s not just that it would be absurd to get worked up over one measly point of extra credit if there weren’t a principle at stake. (That, I would happily grant while “in character.”) It was that expecting San Francisco State University to have principles about freedom of conscience was only slightly less absurd.

It was fine. Prof. Moore “clarified” that the extra credit was viewpoint-neutral. (I was a little embarrassed not to have witnessed the verbal announcement in class on Tuesday, but I had already made plans to interview the campus machine-shop guy at that time instead of coming to class.) After having made a fuss, I was obligated to follow through, so I made a “BUDGET CUTS ARE PROBABLY OK!” sign (re-using the other side of the foamboard from an anti–designated hitter rule sign I had made for a recent National League baseball game) and held it at the rally on Thursday for ten minutes to earn the extra-credit point.

As for the philosophy of animals itself, I was already sufficiently well-versed in naturalist philosophy of mind that I don’t feel like I learned much of anything new. I posted 24/25 (plus a 2-point “curve” because SFSU students are illiterate), 21.5/25 (plus 4), and 22/25 (plus 2) on the three tests, and finished the semester at 101.5% for an A.

“Self, Place, and Knowing: An Introduction to Interdisciplinary Inquiry” (Spring 2025)

I was able to satisfy the “Area E: Lifelong Learning and Self-Development” gen-ed requirement with an asynchronous online-only class, Prof. Mariana Ferreira’s “Self, Place, and Knowing: An Introduction to Interdisciplinary Inquiry”. Whatever expectations I had of a lower-division social studies gen-ed class at San Francisco State University, this felt like a parody of that.

The first few weekly assignments were quizzes on given readings. This already annoyed me: in a synchronous in-person class, a “quiz” is typically closed-book unless otherwise specified. The purpose is to verify that the student did the reading. It would be a perversion of that purpose for the quiz-taker to read the question, and then Ctrl-F in the PDF to find the answer without reading the full text, but there was no provision for stopping that eventuality here.

The first quiz was incredibly poorly written: some of the answers were obvious just from looking at the multiple choice options, and some of them depended on minutiæ of the text that a typical reader couldn’t reasonably be expected to memorize. (The article quoted several academics in passing, and then the quiz had a question of the form “[name] at [university] expresses concerns about:”.) I took it closed-book and got 7/10.

I posted a question on the class forum asking for clarification on the closed-book issue, and gently complaining about the terrible questions (Subject: “Are the quizzes supposed to be ‘open book’? And, question design”). No one replied; I was hoping Prof. Ferreira kept an eye on the forum. I could have inquired with her more directly, but the syllabus said Zoom office hours were by appointment only at 8 a.m. Tuesdays—just when I was supposed to be out the door to be on time for “Measure and Integration.” I didn’t bother.

You might question why I even bothered to ask on the forum, given my contempt for grade-grubbing: I could just adhere to a closed-book policy unilaterally and eat the resulting subpar scores. But I had noticed that my cumulative GPA was sitting at 3.47 (down from 3.49 in Spring 2013 because of that C− in “Queer Literatures and Media” last semester), and 3.5 would classify my degree as cum laude. Despite everything, I think I did want an A in “Self, Place, and Knowing”, and my probability of getting an A was lower if I handicapped myself with moral constraints perceived by myself and probably not anyone else.

I also did the next two quizzes closed book—except that on the third quiz, I think I succumbed to the temptation to peek at the PDF once, but didn’t end up changing my answer as the result of the peek. Was that contrary to the moral law? Was this entire endeavor of finishing the degree now morally tainted by that one moment, however inconsequential it was to any outcome?

I think part of the reason I peeked was that, in that moment, I was feeling doubtful that the logic of “the word ‘quiz’ implies closed-book unless otherwise specified” held any force outside of my own head. Maybe “quiz” just meant “collection of questions to answer”, and it was expected that students would refer back to the reading while completing it. The syllabus had been very clear about LLM use being plagiarism, despite how hard that was to enforce. If Prof. Ferreira had expected the quizzes to be closed book on the honor system, wouldn’t she have said that in the syllabus, too? The fact that no one had shown any interest in clarifying what the rules were, even after I had asked in the most obvious place, suggested that no one cared. I couldn’t be in violation of the moral law if “Self, Place, and Knowing” was not a place where the moral law applied.

It turned out that I needn’t have worried about my handicapped quiz scores (cumulative 32/40 = 80%) hurting my chances of making cum laude. Almost all of the remaining assignments were written (often in the form of posts to the class forum, including responses to other students), and Prof. Ferreira awarded full or almost-full credit for submissions that met the prescribed wordcount and made an effort to satisfy the (often unclear or contradictory) requirements.

Despite the syllabus’s warnings, a few forum responses stuck out to me as having the characteristic tells of being written by an LLM assistant. I insinuated my suspicions in one of my replies to other classmates:

I have to say, there’s something striking about your writing style in this post, and even more so your comments of Ms. Williams’s and Ms. Mcsorley’s posts. The way you summarize and praise your classmates’ ideas has a certain personality to it—somehow I imagine the voice of a humble manservant with a Nigeran accent (betraying no feelings of his own) employed by a technology company, perhaps one headquartered on 18th Street in our very city. You simply must tell us where you learned to write like that!

I felt a little bit nervous about that afterwards: my conscious intent with the “Nigerian manservant” simile was to allude to the story about ChatGPT’s affinity for the word delve being traceable to the word’s prevalence among the English-speaking Nigerians that OpenAI employed as data labelers, but given the cultural milieu of an SFSU social studies class, I worried that it would be called out as racist. (And whatever my conscious intent, maybe at some level I was asking for it.)

I definitely shouldn’t have worried. Other than the fact that Prof. Ferreira gave me credit for the assignment, I have no evidence that any human read what I wrote.

My final paper was an exercise in bullshit and malicious compliance: over the course of an afternoon and evening (and finishing up the next morning), I rambled until I hit the wordcount requirement, titling the result, “How Do Housing Supply and Community Assets Affect Rents and Quality of Life in Census Tract 3240.03? An Critical Microeconomic Synthesis of Self, Place, and Knowing”. My contempt for the exercise would have been quite apparent to anyone who read my work, but Prof. Ferreira predictably either didn’t read it or didn’t care. I got my A, and my Bachelor of Arts in Mathematics (Mathematics for Liberal Arts) cum laude.

Cynicism and Sanity

The satisfaction of finally finishing after all these years was tinged with grief. Despite the manifest justice of my complaints about school, it really hadn’t been that terrible—this time. The math was real, and I suppose it makes sense for some sort of institution to vouch for people knowing math, rather than having to take people’s word for it.

So why didn’t I do this when I was young, the first time, at Santa Cruz? I could have majored in math, even if I’m actually a philosopher. I could have taken the Putnam (which is just offered at UCSC without a student needing to step up to organize). I could have gotten my career started in 2010. It wouldn’t have been hard except insofar as it would have involved wholesome hard things, like the theory of functions of a complex variable.

What is a tragedy rather than an excuse is, I hadn’t known how, at the time. The official story is that the Authority of school is necessary to prepare students for “the real world”. But the thing that made it bearable and even worthwhile this time is that I had enough life experience to treat school as part of the real world that I could interact with on my own terms, and not any kind of Authority. The incomplete contract was an annoyance, not a torturous contradiction in the fabric of reality.

In a word, what saved me was cynicism, except that cynicism is just naturalism about the properties of institutions made out of humans. The behavior of the humans is in part influenced by various streams of written and oral natural-language instructions from various sources. It’s not surprising that there would sometimes be ambiguity in some of the instructions, or even contradictions between different sources of instructions. As an agent interacting with the system, I necessarily had to decide how to respond to ambiguities or contradictions in accordance with my perception of the moral law. The fact that my behavior in the system was subject to the moral law didn’t make the streams of natural-language instructions themselves an Authority under the moral law. I could ask for clarification from a human with authority within the system, but identifying a relevant human and asking had a cost; I didn’t need to ask about every little detail that might come up.

Cheating on a math test would be contrary to the moral law: it feels unclean to even speak of it as a hypothetical possibility. In contrast, clicking through an anti-sexual-harassment training module as quickly as possible without actually watching the video was not contrary to the moral law, even though I had received instructions to do the anti-sexual-harassment training (and good-faith adherence to the instructions would imply carefully attending to the training course content). I’m allowed to notice which instructions are morally “real” and which ones are “fake”, without such guidance being provided by the instructions themselves.

I ended up getting waivers from Chair Hsu for some of my UCSC credits that the computer system hadn’t recognized as fulfilling the degree requirements. I told myself that I didn’t need to neurotically ask follow-up questions about whether it was “really” okay that (e.g.) my converted 3.3 units of linear algebra were being accepted for a 4-unit requirement. It was Chair Hsu’s job to make his own judgement call as to whether it was okay. I would have been willing to take a test to prove that I know linear algebra, but realistically, why would Hsu bother to have someone administer a test rather than just accept the UCSC credits? It was fine; I was fine.

I remember that back in 2012, when I was applying to both SF State and UC Berkeley as a transfer student from community college, the application forms had said to list grades from all college courses attempted, and I wasn’t sure whether that should be construed to include whatever I could remember about the courses from a very brief stint at Heald College in 2008, which I didn’t have a transcript for because I had quit before finishing a single semester without receiving any grades. (Presumably, the intent of the instruction on the forms was to prevent people from trying to elide courses they did poorly in at the institution they were transferring from, which would be discovered anyway when it came time to transfer credits. Arguably, the fact that I had briefly tried Heald and didn’t like it wasn’t relevant to my application on the strength of my complete DVC and UCSC grades.)

As I recall, I ended up listing the incomplete Heald courses on my UC Berkeley application (out of an abundance of moral caution, because Berkeley was actually competitive), but not my SFSU application. (The ultimate outcome of being rejected from Berkeley and accepted to SFSU would have almost certainly been the same regardless.) Was I following morally coherent reasoning? I don’t know. Maybe I should have phoned up the respective admissions offices at the time to get clarification from a human. But the possibility that I might have arguably filled out a form incorrectly thirteen years ago isn’t something that should turn the entire endeavor into ash. The possibility that I might have been admitted to SFSU on such “false pretenses” is not something that any actual human cares about. (And if someone does, at least I’m telling the world about it in this blog post, to help them take appropriate action.) It’s fine; I’m fine.

When Prof. Mujamdar asked us to bring our laptops for the recitation on importance sampling and I didn’t feel like lugging my laptop on BART, I just did the work at home—in Rust—and verbally collaborated with a classmate during the recitation session. I didn’t ask for permission to not bring the laptop, or to use Rust. It was fine; I was fine.

In November 2024, I had arranged to meet with Prof. Arek Goetz “slightly before midday” regarding the rapidly approaching registration deadline for the Putnam competition. I ducked out of “Real II” early and knocked on his office door at 11:50 a.m., then waited until 12:20 before sending him an email on my phone and proceeding to my 12:30 “Queer Literatures and Media” class. While surreptitiously checking my phone during class, I saw that at 12:38 p.m., he emailed me, “Hello Zack, I am in the office, not sure if you stopped by yet…”. I raised my hand, made a contribution to the class discussion when Prof. Goldberg called on me (offering Seinfeld’s “not that there’s anything wrong with that” episode as an example of homophobia in television), then grabbed my bag and slipped out while she had her back turned to the whiteboard. Syncing up with Prof. Goetz about the Putnam registration didn’t take long. When I got back to “Queer Literatures and Media”, the class had split up into small discussion groups; I joined someone’s group. Prof. Goldberg acknowledged my return with a glance and didn’t seem annoyed.

Missing parts of two classes in order to organize another school activity might seem too trivial an anecdote to be worth spending wordcount on, but it felt like a significant moment insofar as I was applying a wisdom not taught in schools, that you can just do things. Some professors would have considered it an affront to just walk out of a class, but I hadn’t asked for permission, and it was fine; I was fine.

In contrast to my negligence in “Queer Literatures and Media”, I mostly did the reading for “Philosophy of Animals”—but only mostly. It wasn’t important to notice or track if I missed an article or skimmed a few pages here and there (in addition to my thing of cutting class in favor of Prof. Schuster’s office hours half the time). I engaged with the material enough to answer the written exam questions, and that was the only thing anyone was measuring. It was fine; I was fine.

I was fine now, but I hadn’t been fine at Santa Cruz in 2007. The contrast in mindset is instructive. The precipitating event of my whole anti-school crusade had been the hysterical complete mental breakdown I had after finding myself unable to meet pagecount on a paper for Prof. Bettina Aptheker’s famous “Introduction to Feminisms” course.

It seems so insane in retrospect. As I demonstrated with my malicious compliance for “Self, Place, and Knowing”, writing a paper that will receive a decent grade in an undergraduate social studies class is just not cognitively difficult (even if Prof. Aptheker and the UCSC of 2007 probably had higher standards than Prof. Ferreira and the SFSU of 2025). I could have done it—if I had been cynical enough to bullshit for the sake of the assignment, rather than holding myself to the standard of writing something I believed and having a complete mental breakdown rather than confront the fact that I apparently didn’t believe what I was being taught in “Introduction to Feminisms.”

I don’t want to condemn my younger self entirely, because the trait that made me so dysfunctional was a form of integrity. I was right to want to write something I believed. It would be wrong to give up my soul to the kind of cynicism that scorns ideals themselves, rather than the kind that scorns people and institutions for not living up to the ideals and lying about it.

Even so, it would have been better for everyone if I had either bullshitted to meet the pagecount, or just turned in a too-short paper without having a total mental breakdown about it. The total mental breakdown didn’t help anyone! It was bad for me, and it imposed costs on everyone around me.

I wish I had known that the kind of integrity I craved could be had in other ways. I think I did better for myself this time by mostly complying with the streams of natural language instructions, but not throwing a fit when I didn’t comply, and writing this blog post afterwards to clarify what happened. If anyone has any doubts about the meaning of my Bachelor of Arts in Mathematics for Liberal Arts from San Francisco State University, they can read this post and get a pretty good idea of what that entailed. I’ve put more than enough effort into being transparent that it doesn’t make sense for me to be neurotically afraid of accidentally being a fraud.

I think the Bachelor of Arts in Mathematics does mean something, even to me. It can simultaneously be the case that existing schools are awful for the reasons I’ve laid out, and that there’s something real about some parts of them. Part of the tragedy of my story is that having wasted too much of my life in classes that were just obedience tests, I wasn’t prepared to appreciate the value of classes that weren’t just that. If I had known, I could have deliberately sought them out at Santa Cruz.

I think I’ve latched on to math as something legible enough and unnatural enough (in contrast to writing) that the school model is tolerable. My primary contributions to the world are not as a mathematician, but if I have to prove my intellectual value to Society in some way that doesn’t depend on people intimately knowing my work, this is a way that makes sense, because math is too difficult and too pure to be ruined by the institution. Maybe other subjects could be studied in school in a way that’s not fake. I just haven’t seen it done.

There’s also a sense of grief and impermanence about only having my serious-university-math experience in the GPT-4 era rather than getting to experience it in the before-time while it lasted. If I didn’t have LLM tutors, I would have had to be more aggressive about collaborating with peers and asking followup questions in office hours.

My grudging admission that the degree means something to me should not be construed as support for credentialism. Chris Olah never got his Bachelor’s degree, and anyone who thinks less of him because of that is telling on themselves.

At the same time, I’m not Chris Olah. For those of us without access to the feedback loops entailed by a research position at Google Brain, there’s a benefit to being calibrated about the standard way things are done. (Which, I hasten to note, I could in principle have gotten from MIT OpenCourseWare; my accounting of benefits from happening to finish college is not an admission that the credentialists were right.) Obviously, I knew that math is not a spectator sport: in the years that I was filling my pages of notes from my own textbooks, I was attempting exercises and not just reading (because just reading doesn’t work). But was I doing enough exercises, correctly, to the standard that would be demanded in a school class, before moving on to the next shiny topic? It’s not worth the effort to do an exhaustive audit of my 2008–2024 private work, but I think in many cases, I was not. Having a better sense of what the mainstream standard is will help me adjust my self-study practices going forward.

When I informally audited “Honors Introduction to Analysis” (“MATH H104”) at UC Berkeley in 2017, Prof. Charles C. Pugh agreed to grade my midterm, and I got a 56/100. I don’t know what the class’s distribution was. Having been given to understand that many STEM courses offered a generous curve, I would later describe it as me “[doing] fine on the midterm”. Looking at the exam paper after having been through even SFSU’s idea of an analysis course, I think I was expecting too little of myself: by all rights, a serious analysis student in exam shape should be able to prove that the minimum distance between a compact and a closed set is achieved by some pair of points in the sets, or that the product of connected spaces is connected (as opposed to merely writing down relevant observations that fell short of a proof, as I did).

In a July 2011 Diary entry, yearning to finally be free of school, I fantasized about speedrunning SF State’s “advanced studies” track in two semesters: “Six classes a semester sounds like a heavy load, but it won’t be if I study some of the material in advance,” I wrote. That seems delusional now. That’s not actually true of real math classes, even if it were potentially true of “Self, Place, and Knowing”-tier bullshit classes.

It doesn’t justify the scourge of credentialism, but the fact that I was ill-calibrated about the reality of the mathematical skill ladder helps explain why the coercion of credentialism is functional, why the power structure survives instead of immediately getting competed out of existence. As terrible as school is along so many dimensions, it’s tragically possible for people to do worse for themselves in freedom along some key dimensions.

There’s a substantial component of chance in my coming to finish the degree. The idea presented itself to me in early 2024 while I was considering what to work on next after a writing project had reached a natural stopping point. People were discussing education and schooling on Twitter in a way that pained me, and it occurred to me that I would feel better about being able to criticize school from the position of “… and I have a math degree” rather than “… so I didn’t finish.” It seemed convenient enough, so I did it.

But a key reason it seemed convenient enough is that I still happened to live within commuting distance of SF State. That may be more due to inertia than anything else; when I needed to change apartments in 2023, I had considered moving to Reno, NV, but ended up staying in the East Bay because it was less of a hassle. If I had fled to Reno, then transferring credits and finishing the degree on a whim at the University of Nevada–Reno would have been less convenient. I probably wouldn’t have done it—and I think it was ultimately worth doing.

The fact that humans are such weak general intelligences that so much of our lives comes down to happenstance, rather than people charting an optimal path for themselves, helps explain why there are institutions that shunt people down a standard track with a known distribution of results. I still don’t like it, and I still think people should try to do better for themselves, but it seems somewhat less perverse now.

Afterwards, Prof. Schuster encouraged me via email to at least consider grad school, saying that I seemed comparable to his peers in the University of Michigan Ph.D. program (which was ranked #10 in the U.S. at that time in the late ’90s). I demurred: I said I would consider it if circumstances were otherwise, but in contrast to the last two semesters to finish undergrad, grad school didn’t pass a cost-benefit analysis.

(Okay, I did end up crashing Prof. Clader’s “Advanced Topics in Mathematics: Algebraic Topology” (“MATH 790”) the following semester, and she agreed to grade my examinations, on which I got 47/50, 45/50, 46/50, and 31/50. But I didn’t enroll.)

What was significant (but not appropriate to mention in the email) was that now the choice to pursue more schooling was a matter of cost–benefit analysis, and not a prospect of torment or betrayal of the divine.

I wasn’t that crazy anymore.